Contents

LM Studio: Qwen3-8B

Docker

Dify

Reference blog post:

Building a document Q&A assistant on a Windows PC with Qwen3 and Dify (dify document Q&A, CSDN blog)

Setting up a web server with LM Studio

Testing the server:

```python
import requests
import json

url = "http://192.168.1.8:2000/v1/chat/completions"
# url = "http://127.0.0.1:2000/v1/chat/completions"

headers = {
    "Content-Type": "application/json"
}

data = {
    "model": "qwen3-8b",
    "messages": [
        {"role": "system", "content": "Always answer in rhymes. Today is Thursday"},
        {"role": "user", "content": "What day is it today?"}
    ],
    "temperature": 0.7,
    "max_tokens": -1,
    "stream": False
}

response = requests.post(url, headers=headers, data=json.dumps(data))
print(response.status_code)
print(response.text)
```
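The response body follows the OpenAI chat-completions format, so the reply text sits at `choices[0].message.content`. A minimal sketch that extracts it, assuming that format; `extract_reply` is a hypothetical helper name. Qwen3 may wrap its reasoning in a `<think>...</think>` block, and whether that block shows up inside `content` depends on the model and LM Studio settings, so stripping it is optional and an assumption here.

```python
import json
import re

# Assumption: Qwen3 reasoning, if present in "content", is wrapped in <think>...</think>
THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def extract_reply(body: str, strip_think: bool = True) -> str:
    """Return the assistant text from an OpenAI-style chat-completions response body."""
    payload = json.loads(body)
    content = payload["choices"][0]["message"]["content"] or ""
    if strip_think:
        # Drop the reasoning block so only the final answer remains
        content = THINK_BLOCK.sub("", content)
    return content.strip()


if __name__ == "__main__":
    sample = json.dumps({
        "choices": [
            {"message": {"role": "assistant",
                         "content": "<think>It is Thursday.</think>\nToday is Thursday, hooray!"}}
        ]
    })
    print(extract_reply(sample))  # Today is Thursday, hooray!
```

In the test script, `print(extract_reply(response.text))` can stand in for `print(response.text)` once the status code is 200.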

Flask model server (a proxy in front of LM Studio)

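```python
from flask import Flask, Response, jsonify, request
import requests

app = Flask(__name__)

# Your LM Studio address
LM_STUDIO_BASE = "http://192.168.1.8:2000"

# Headers that must not be copied verbatim: requests has already
# decoded and buffered the upstream body
EXCLUDED_HEADERS = {"content-encoding", "content-length", "transfer-encoding", "connection"}


@app.route("/v1/models", methods=["GET"])
def models():
    # Report a fixed model list to clients
    return jsonify({
        "object": "list",
        "data": [
            {
                "id": "qwen3-8b",
                "object": "model",
                "created": 0,
                "owned_by": "lmstudio",
            }
        ],
    })


@app.route("/v1/<path:path>", methods=["POST"])
def proxy(path):
    # Forward the JSON body of any POST under /v1/ to LM Studio unchanged
    resp = requests.post(f"{LM_STUDIO_BASE}/v1/{path}", json=request.get_json())
    headers = [(k, v) for k, v in resp.headers.items() if k.lower() not in EXCLUDED_HEADERS]
    return Response(resp.content, status=resp.status_code, headers=headers)


if __name__ == "__main__":
    app.run(host="0.0.0.0", port=3001)
```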
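To confirm the proxy answers and reports the expected model id, its `/v1/models` response can be checked from Python. A minimal standard-library sketch, assuming the proxy runs on the same machine on port 3001 as in `app.run`; `fetch_models` and `list_model_ids` are hypothetical helper names.

```python
import json
import urllib.request


def list_model_ids(models_payload: dict) -> list[str]:
    """Collect the model ids from an OpenAI-style /v1/models response."""
    return [entry["id"] for entry in models_payload.get("data", [])]


def fetch_models(base_url: str, timeout: float = 5.0) -> dict:
    """GET {base_url}/v1/models and decode the JSON body."""
    with urllib.request.urlopen(f"{base_url}/v1/models", timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Assumption: the Flask proxy above is running locally on port 3001
    ids = list_model_ids(fetch_models("http://127.0.0.1:3001"))
    print(ids)
    if "qwen3-8b" not in ids:
        print("qwen3-8b is not listed; check the proxy and LM Studio")
```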

Verified working: "http://192.168.1.8:3000/v1/models"

Download and install LM Studio

LM Studio - Discover, download, and run local LLMs

At the download-a-model step of the first-run setup, you can click Skip on the right.

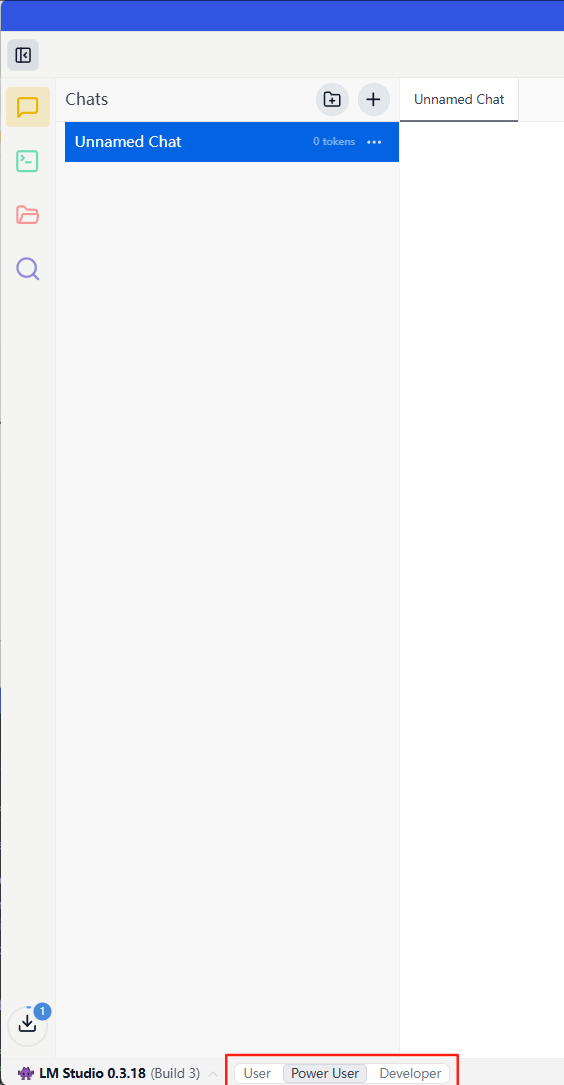

You can switch between User and Developer mode in the bottom-left corner.

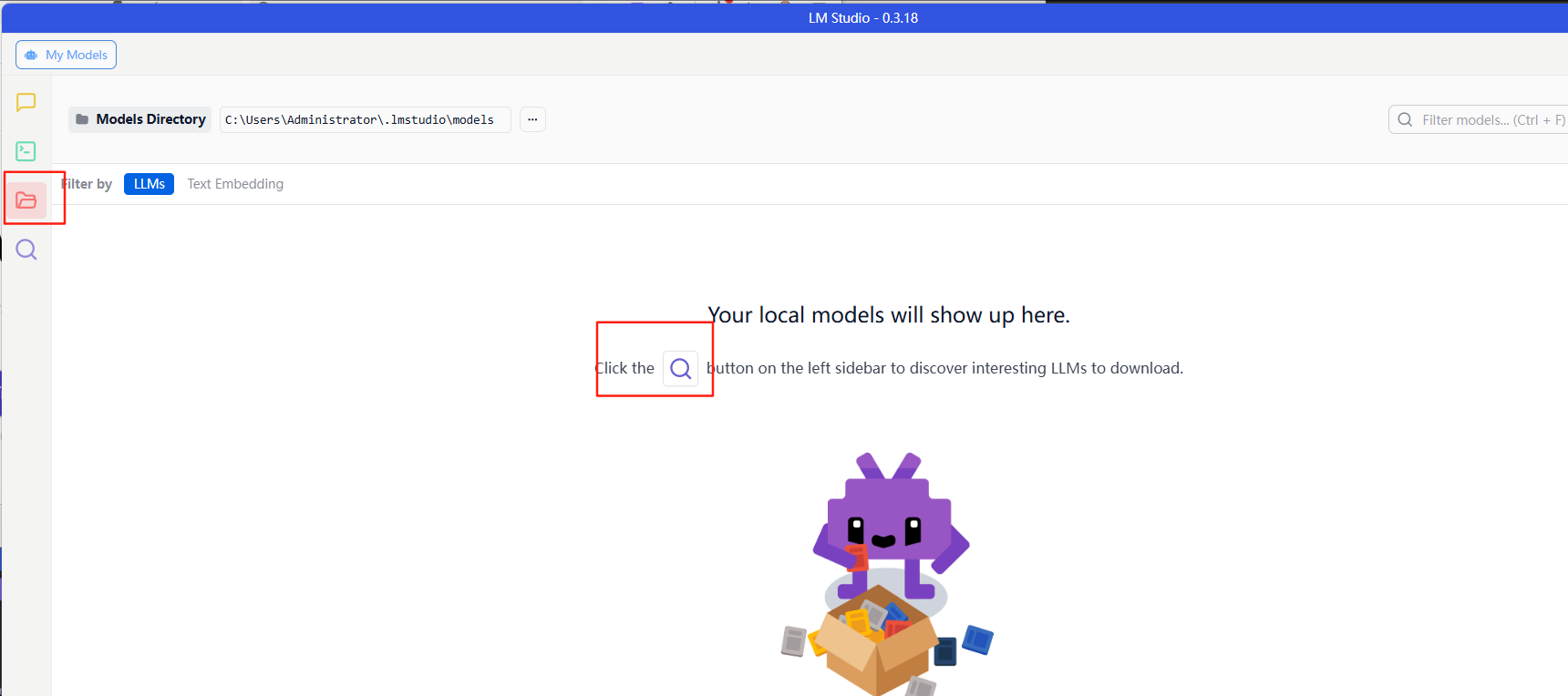

Download a model

Select the directory on the left, click the search box in the middle of the screen, and then pick a model to download.

Windows:

Download the Dify source code:

https://github.com/langgenius/dify

```bat
cd dify\docker
copy .env.example .env
docker compose up -d
```

Error:

```text
Bind for 0.0.0.0:80 failed: port is already allocated
```
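This error means another process on the host already listens on port 80, so Docker cannot publish Dify's nginx container there. Either stop that process or map nginx to a different host port (in Dify's `docker/.env` this is `EXPOSE_NGINX_PORT`). A small standard-library sketch for checking a port before running `docker compose up -d`; `port_in_use` is a hypothetical helper, and it only detects a listener reachable on the given host address.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 0.5) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success instead of raising on refusal
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    for port in (80, 443):
        state = "in use" if port_in_use(port) else "free"
        print(f"port {port}: {state}")
```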

Linux:

```bash
git clone https://github.com/langgenius/dify.git
cd dify/docker
cp .env.example .env   # copy the environment-variable template
nano .env              # edit the key settings
docker compose up -d
```
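The edit done with `nano .env` can also be scripted. A minimal sketch, assuming one plain `KEY=value` assignment per line; `set_env_value` is a hypothetical helper, and `EXPOSE_NGINX_PORT` (the host port for Dify's nginx, `80` in `.env.example`) serves only as an example key.

```python
from pathlib import Path


def set_env_value(text: str, key: str, value: str) -> str:
    """Return .env text with key set to value, appending the key if it is missing."""
    lines = text.splitlines()
    prefix = f"{key}="
    for i, line in enumerate(lines):
        # Only replace an active assignment; commented-out lines start with '#'
        if line.strip().startswith(prefix):
            lines[i] = f"{key}={value}"
            break
    else:
        lines.append(f"{key}={value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    env_path = Path(".env")  # run from dify/docker
    text = env_path.read_text(encoding="utf-8")
    env_path.write_text(set_env_value(text, "EXPOSE_NGINX_PORT", "8080"), encoding="utf-8")
```

After changing the port, re-run `docker compose up -d` and open Dify at `http://localhost:8080` instead of `http://localhost`.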

Once startup succeeds, the running containers show up in the Docker client:

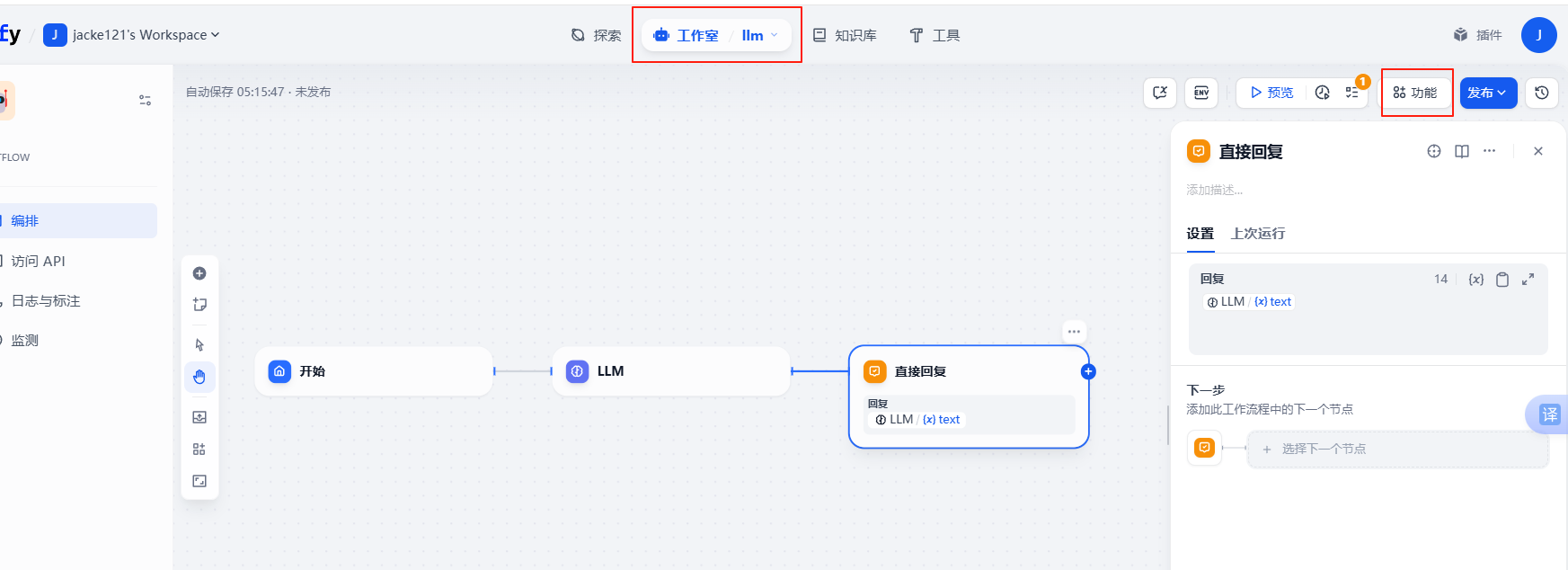

4. Create an AI app in Dify

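Open the URL (http://localhost) in a browser, then register and log in.

First visit in the browser: OK.

First visit: 404 error at http://localhost/signin

After setting the email and username, the login page loads fine.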
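After `docker compose up -d` the containers may need some time before the web UI answers, so a very early visit can fail. A small polling sketch using only the standard library; `wait_for_http` is a hypothetical helper, and treating any status below 500 as "up" is an assumption (a 502 from nginx typically means the upstream service is not ready yet).

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout: float = 120.0, interval: float = 2.0) -> bool:
    """Poll url until it returns a status below 500 or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status < 500:
                    return True
        except urllib.error.HTTPError as err:
            # A 4xx still means the web server answered; 5xx means keep waiting
            if err.code < 500:
                return True
        except OSError:
            pass  # connection refused, reset or timed out: not up yet
        time.sleep(interval)
    return False


if __name__ == "__main__":
    print(wait_for_http("http://localhost"))
```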


Configure the Qwen model served by LM Studio: in Settings → Model Provider, install the OpenAI-API-compatible provider.
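Because Dify runs inside Docker containers, `localhost` or `127.0.0.1` in the provider's API endpoint refers to the Dify container itself, not to the machine running LM Studio; a LAN address such as `192.168.1.8` (or `host.docker.internal` on Docker Desktop) avoids that. A small sketch that flags such endpoints before they go into Dify; `check_endpoint` is a hypothetical helper, and the `/v1` suffix check reflects the usual OpenAI-compatible base URL, which is an assumption about this setup.

```python
import ipaddress
from urllib.parse import urlparse


def check_endpoint(url: str) -> list[str]:
    """Return warnings for an API endpoint that a Dockerized Dify would call."""
    warnings = []
    parsed = urlparse(url)
    host = parsed.hostname or ""
    if parsed.scheme not in ("http", "https"):
        warnings.append("endpoint should start with http:// or https://")
    is_loopback = host == "localhost"
    if not is_loopback:
        try:
            is_loopback = ipaddress.ip_address(host).is_loopback
        except ValueError:
            pass  # a hostname such as host.docker.internal, not an IP literal
    if is_loopback:
        warnings.append(f"{host} points at the Dify container itself; "
                        "use the host's LAN IP or host.docker.internal")
    if not parsed.path.rstrip("/").endswith("/v1"):
        warnings.append("OpenAI-compatible base URLs usually end with /v1")
    return warnings


if __name__ == "__main__":
    for candidate in ("http://127.0.0.1:2000/v1", "http://192.168.1.8:2000/v1"):
        print(candidate, check_endpoint(candidate) or "ok")
```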

Download Qwen3-8B and an embedding model

```bash
pip install modelscope
modelscope download --model Qwen/Qwen3-8B --local_dir Qwen3-8B
modelscope download --model maidalun/bce-embedding-base_v1 --local_dir bce-embedding-base_v1
```
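A quick check that each download finished can save a debugging round later. A sketch assuming the usual Hugging Face-style layout (a `config.json` plus `*.safetensors` or `*.bin` weight files); `looks_complete` is a hypothetical helper, and it does not verify checksums or file sizes.

```python
from pathlib import Path

WEIGHT_PATTERNS = ("*.safetensors", "*.bin")


def looks_complete(model_dir: str) -> bool:
    """Return True if model_dir has a config.json and at least one weight file."""
    root = Path(model_dir)
    if not (root / "config.json").is_file():
        return False
    # any() over each glob stops at the first matching weight file
    return any(any(root.glob(pattern)) for pattern in WEIGHT_PATTERNS)


if __name__ == "__main__":
    for name in ("Qwen3-8B", "bce-embedding-base_v1"):
        print(name, "ok" if looks_complete(name) else "incomplete or missing")
```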

Select the model in Dify: