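Note: This is a compilation of articles on “Linear Algebra · Intuitive Understanding of Concepts”.

English quotations: machine-translated, not proofread.

Chinese quotations: lightly rearranged.

If anything looks wrong, please refer to the original.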

An Intuitive Understanding of Some Linear Algebra Concepts

2015-03-06 Updated: 2015-05-09

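This article offers intuitive explanations of several matrix-related concepts, including determinants, matrix multiplication, eigenvalues and eigenvectors, which should help with modeling and solving practical problems.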

1. Linear Algebra and Matrices

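Linear algebra is a branch of abstract algebra, which studies algebraic structures. An algebraic structure can be thought of as a set together with a system of operation rules. A vector space, also called a linear space, is one kind of algebraic structure; for details, see the earlier post “Introduction to Algebraic Structures: Groups, Rings, Fields, and Vector Spaces”.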

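A vector space consists of vectors, which are its elements (geometrically, a vector is a quantity with both magnitude and direction). It also has rules for adding vectors and for multiplying them by scalars, and these rules must satisfy certain axioms. Maps between vector spaces that respect these two operations are called linear transformations (linear mappings). In a vector space the objects being operated on are vectors rather than numbers, and their operations behave differently from the four arithmetic operations of elementary algebra.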

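In an $n$-dimensional linear space, any $n$ linearly independent vectors form a basis of that space. Once a basis is chosen, every element (vector) can be represented uniquely by its coordinates. For example, in three-dimensional Euclidean space, choose the coordinate system given by the basis $[1,0,0]$, $[0,1,0]$, $[0,0,1]$; every point in space can then be written uniquely as $(a,b,c)$.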
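Here is a small illustration of unique representation. It is a sketch, and the basis $b_1=(1,1)$, $b_2=(1,-1)$ and the function name `coordinates_2d` are hypothetical choices made for this example. The code solves $c_1 b_1 + c_2 b_2 = v$ for the coordinates in a non-standard basis of the plane using Cramer's rule. A nonzero determinant is exactly the condition that the two vectors are linearly independent, and that is why the coordinates exist and are unique.

```python
from fractions import Fraction


def coordinates_2d(b1, b2, v):
    """Return (c1, c2) with c1*b1 + c2*b2 == v, via Cramer's rule.

    b1 and b2 must be linearly independent, i.e. form a basis of the plane.
    """
    det = b1[0] * b2[1] - b2[0] * b1[1]
    if det == 0:
        raise ValueError("b1 and b2 are linearly dependent: not a basis")
    c1 = Fraction(v[0] * b2[1] - b2[0] * v[1], det)
    c2 = Fraction(b1[0] * v[1] - v[0] * b1[1], det)
    return c1, c2


# In the standard basis, the coordinates are just the components.
print(coordinates_2d((1, 0), (0, 1), (3, 1)))   # (Fraction(3, 1), Fraction(1, 1))
# A different basis gives different coordinates for the same vector.
print(coordinates_2d((1, 1), (1, -1), (3, 1)))  # (Fraction(2, 1), Fraction(1, 1))
```

The same vector $(3,1)$ has coordinates $(3,1)$ in one basis and $(2,1)$ in the other. This is exactly why questions about individual entries depend on the choice of basis.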

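A matrix can be viewed as a collection of row vectors or column vectors of the same dimension, and a linear transformation can be written in matrix form. Note, however, that matrix theory and linear algebra differ in an important way. Matrix theory works with a basis that has already been chosen, whereas linear algebra is concerned with properties that do not depend on the choice of basis. Here is an answer excerpted from MathOverflow: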

When you talk about matrices, you’re allowed to talk about things like the entry in the 3rd row and 4th column, and so forth. In this setting, matrices are useful for representing things like transition probabilities in a Markov chain, where each entry indicates the probability of transitioning from one state to another.

In linear algebra, however, you instead talk about linear transformations, which are not a list of numbers, although sometimes it is convenient to use a particular matrix to write down a linear transformation. However, when you’re given a linear transformation, you’re not allowed to ask for things like the entry in its 3rd row and 4th column because questions like these depend on a choice of basis. Instead, you’re only allowed to ask for things that don’t depend on the basis, such as the rank, the trace, the determinant, or the set of eigenvalues. This point of view may seem unnecessarily restrictive, but it is fundamental to a deeper understanding of pure mathematics.

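Linearity describes a relationship between two variables that can be written as a first-degree function whose graph is a straight line through the origin. Formally, a function is linear if it satisfies two conditions: additivity and homogeneity. The Wikipedia definition reads: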

Additivity: $f(x + y) = f(x) + f(y)$.

Homogeneity of degree 1: $f(\alpha x) = \alpha f(x)$ for all $\alpha$.
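The two conditions can be spot-checked numerically. The sketch below tests additivity and homogeneity on a few integer inputs for two functions: the price function $F(x,y,z)=3x+4y+5z$ used in the next section, and $g(v)=v_1^2$, which fails. The helper `is_linear_on_samples` and the sample points are hypothetical, chosen only for illustration. Passing on samples is only evidence, not a proof of linearity, but a single failing sample does prove that a function is not linear.

```python
from itertools import product


def F(v):
    """Total price 3x + 4y + 5z: a linear function of v = (x, y, z)."""
    x, y, z = v
    return 3 * x + 4 * y + 5 * z


def g(v):
    """Square of the first component: not linear."""
    return v[0] ** 2


def add(u, v):
    return tuple(a + b for a, b in zip(u, v))


def scale(alpha, v):
    return tuple(alpha * a for a in v)


def is_linear_on_samples(f, samples, scalars):
    """Check f(u+v) == f(u)+f(v) and f(a*u) == a*f(u) on all sample combinations."""
    for u, v in product(samples, repeat=2):
        if f(add(u, v)) != f(u) + f(v):         # additivity
            return False
    for alpha, u in product(scalars, samples):
        if f(scale(alpha, u)) != alpha * f(u):  # homogeneity of degree 1
            return False
    return True


samples = [(1, 2, 3), (2, 1, 3), (-1, 0, 4)]
scalars = [-2, 0, 3]
print(is_linear_on_samples(F, samples, scalars))  # True
print(is_linear_on_samples(g, samples, scalars))  # False
```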

2. From Linear Equations to Matrices

Take the following function as an example. Let $x$, $y$, $z$ be the quantities of three kinds of fruit, with unit prices 3, 4 and 5; then $F$ is the total price. If $x$, $y$, $z$ are 1, 2 and 3 jin respectively, the total price is $F(1,2,3) = 3\times1 + 4\times2 + 5\times3 = 26$.

$$F(x, y, z) = 3x + 4y + 5z$$

Now suppose three people each buy these three fruits, in quantities (1,2,3), (2,1,3) and (3,2,1), and pay 26, 25 and 22 in total. This can be written in matrix form, $Ax = y$:

The coefficient matrix $A$ holds the unit prices, $A = \begin{bmatrix} 3 & 4 & 5 \end{bmatrix}$. The matrix $x$ holds the three people's purchases, one column per person: $x = \begin{bmatrix} 1 & 2 & 3 \\ 2 & 1 & 2 \\ 3 & 3 & 1 \end{bmatrix}$. The result $y$ holds the totals, $y = \begin{bmatrix} 26 & 25 & 22 \end{bmatrix}$. The multiplication works out as:

$$\begin{bmatrix} 3 & 4 & 5 \end{bmatrix} \times \begin{bmatrix} 1 & 2 & 3 \\ 2 & 1 & 2 \\ 3 & 3 & 1 \end{bmatrix} = \begin{bmatrix} 3\times 1+4\times 2+5\times 3 & 3\times 2+4\times 1+5\times 3 & 3\times 3+4\times 2+5\times 1 \end{bmatrix} = \begin{bmatrix} 26 & 25 & 22 \end{bmatrix}$$

Writing the computation as a matrix operation makes it more transparent. The main reason for putting linear equations in matrix form is to simplify the processing of large amounts of data, that is, to enable efficient batch processing.

As shown above, matrix multiplication multiplies rows by columns. Suppose an $m \times n$ matrix $A$ is multiplied by an $n \times p$ matrix $B$ to give an $m \times p$ matrix $C$. The entry $C_{ij}$ in row $i$, column $j$ of $C$ is obtained by multiplying each entry in row $i$ of $A$ by the corresponding entry in column $j$ of $B$ and summing the products: $C_{ij} = \sum_{k=1}^{n} A_{ik} B_{kj}$. This “row times column” rule is the core of matrix multiplication. It is what makes the product of two matrices represent the composition of the two linear transformations they stand for.

Next, suppose prices rise: the unit prices of $x$, $y$ and $z$ go up to 7, 8 and 9, while the three people buy the same quantities. Then:

The coefficient matrix becomes $A' = \begin{bmatrix} 7 & 8 & 9 \end{bmatrix}$, and the product is:

$$\begin{bmatrix} 7 & 8 & 9 \end{bmatrix} \times \begin{bmatrix} 1 & 2 & 3 \\ 2 & 1 & 2 \\ 3 & 3 & 1 \end{bmatrix} = \begin{bmatrix} 7\times 1+8\times 2+9\times 3 & 7\times 2+8\times 1+9\times 3 & 7\times 3+8\times 2+9\times 1 \end{bmatrix} = \begin{bmatrix} 50 & 49 & 46 \end{bmatrix}$$
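The row-times-column rule $C_{ij}=\sum_k A_{ik}B_{kj}$ translates directly into code. Below is a minimal pure-Python sketch; the function name `matmul` is our own, and lists of rows stand in for matrices. It is checked against the fruit example, with one column per buyer.

```python
def matmul(A, B):
    """Multiply an m x n matrix A by an n x p matrix B (both lists of rows)."""
    n = len(B)
    if any(len(row) != n for row in A):
        raise ValueError("inner dimensions do not match")
    p = len(B[0])
    # C[i][j] = sum over k of A[i][k] * B[k][j]: row i of A times column j of B.
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(p)]
            for i in range(len(A))]


# One column per buyer: (1,2,3), (2,1,3), (3,2,1) jin of the three fruits.
x = [[1, 2, 3],
     [2, 1, 2],
     [3, 3, 1]]
print(matmul([[3, 4, 5]], x))  # [[26, 25, 22]]
print(matmul([[7, 8, 9]], x))  # [[50, 49, 46]]
```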

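Compared with the previous step, the operation itself can now also be extended to more dimensions. Stacking the price lists $A$ and $A'$ as the rows of a single matrix handles both price scenarios in one multiplication, a further step toward batch processing. If this is still unclear, see the “A Non-Vector Example: Stock Market Portfolios” example in the BetterExplained article “An Intuitive Guide to Linear Algebra”.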
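Stack the old and new price lists as the rows of one $2\times 3$ operation matrix. A single product then computes every buyer's total under both sets of prices. This is a sketch: the helper `matmul` is written here only for illustration and assumes compatible shapes.

```python
def matmul(A, B):
    """Row-times-column product of two matrices given as lists of rows."""
    cols = list(zip(*B))  # the columns of B
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


prices = [[3, 4, 5],     # original unit prices
          [7, 8, 9]]     # unit prices after the increase
purchases = [[1, 2, 3],  # one column per buyer
             [2, 1, 2],
             [3, 3, 1]]
totals = matmul(prices, purchases)
print(totals)  # [[26, 25, 22], [50, 49, 46]]
```

Each row of the result is one price scenario, and each column is one buyer. Adding another scenario means adding one more row to `prices`; the computation itself does not change.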

A matrix made up of such operations is in fact a linear transformation, that is, a transformation applied to the input.

3. Linear Transformations

A linear transformation, also called a linear mapping, is a map between vector spaces that preserves their two operations, vector addition and scalar multiplication.

3.1 Scalar Multiplication

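Scalar multiplication is easy to understand: it scales every entry of a matrix by the same factor. Recall the homogeneity property of linearity (the other property is additivity):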

Homogeneity of degree 1: $f(\alpha x) = \alpha f(x)$ for all $\alpha$.

It can also be understood as stretching or shrinking the coordinate axes, or a basis, by the same factor.

3.2 Addition

Because the transformation is linear, it also satisfies additivity:

Additivity: $f(x + y) = f(x) + f(y)$.

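Matrix addition is defined by adding the corresponding entries of two matrices of the same size, much as adding two functions adds the coefficients of like variables. Matrix addition has the following properties: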

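Associativity of addition: $u + (v + w) = (u + v) + w$. When three matrices are added, the sum is the same however they are grouped.

Additive identity: there is a zero matrix $0$ such that $v + 0 = v$ for every matrix $v \in V$ (here $V$ is the set of all matrices of a given size). Adding the zero matrix to any matrix leaves that matrix unchanged.

Additive inverse: for every matrix $v \in V$ there is a matrix $w \in V$, namely $w = -v$, such that $v + w = 0$. So every matrix has an additive inverse, which gives the zero matrix when added to it. This should not be confused with the inverse matrix of matrix multiplication.

Commutativity of addition: $v + w = w + v$. The order in which two matrices are added does not affect the result.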

These properties make matrix addition well-behaved and consistent, and they underlie all matrix operations in linear algebra.
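Below is a quick spot check of the four properties on a few $2\times 2$ integer matrices. The sample values are arbitrary, and `mat_add` and `neg` are illustrative helpers. Like any finite check, this illustrates the properties rather than proving them. The proofs follow entry by entry from the same properties of ordinary numbers.

```python
def mat_add(P, Q):
    """Entry-wise sum of two matrices of the same shape."""
    return [[p + q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]


def neg(P):
    """Additive inverse: negate every entry."""
    return [[-p for p in row] for row in P]


u = [[1, 2], [3, 4]]
v = [[0, -1], [5, 2]]
w = [[7, 7], [-3, 1]]
zero = [[0, 0], [0, 0]]

assert mat_add(u, mat_add(v, w)) == mat_add(mat_add(u, v), w)  # associativity
assert mat_add(v, zero) == v                                   # additive identity
assert mat_add(v, neg(v)) == zero                              # additive inverse
assert mat_add(v, w) == mat_add(w, v)                          # commutativity
print("all four properties hold for these samples")
```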

3.3 Matrix Multiplication

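Before looking at matrix multiplication, start with vector multiplication. A vector can in fact be viewed as a matrix with only one row or one column: row vectors and column vectors correspond to row matrices and column matrices.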
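If vectors are treated as $1\times n$ and $n\times 1$ matrices, the ordinary row-times-column product covers both familiar vector products. A row times a column is a $1\times 1$ matrix holding the dot product. A column times a row is an $n\times n$ matrix, the outer product. Here is a pure-Python sketch with an illustrative `matmul` helper:

```python
def matmul(A, B):
    """Product of matrices stored as lists of rows (shapes assumed compatible)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


row = [[1, 2, 3]]        # 1 x 3 row vector
col = [[4], [5], [6]]    # 3 x 1 column vector

print(matmul(row, col))  # [[32]]: 1 x 1, the dot product 1*4 + 2*5 + 3*6
print(matmul(col, row))  # [[4, 8, 12], [5, 10, 15], [6, 12, 18]]: 3 x 3
```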

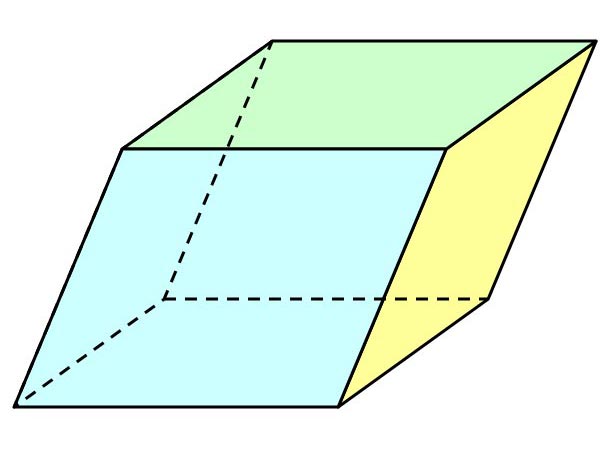

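Linear transformations of the plane include uniform enlargement, stretching, rotation and skew (shear). Translation is not one of them: a translation moves the origin, whereas every linear transformation maps the zero vector to itself.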
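Every linear map sends the zero vector to itself, because $f(0)=f(0\cdot 0)=0\cdot f(0)=0$, and this is why translation is excluded. The sketch below checks additivity for a rotation, a shear and a translation of the plane. It is a hypothetical example: the angle, shear factor and shift are arbitrary choices.

```python
import math


def rotate(v, theta=math.pi / 6):
    """Rotate v counter-clockwise by theta: multiplication by a rotation matrix."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])


def shear(v, k=2.0):
    """Horizontal shear (skew): x' = x + k*y, y' = y."""
    return (v[0] + k * v[1], v[1])


def translate(v, shift=(1.0, 0.0)):
    """Shift every point by a fixed vector: not a linear map."""
    return (v[0] + shift[0], v[1] + shift[1])


def additive(f, u, v, tol=1e-12):
    """True if f(u + v) equals f(u) + f(v) up to floating-point tolerance."""
    lhs = f((u[0] + v[0], u[1] + v[1]))
    rhs = tuple(a + b for a, b in zip(f(u), f(v)))
    return all(abs(a - b) <= tol for a, b in zip(lhs, rhs))


u, v = (1.0, 2.0), (-3.0, 0.5)
for f in (rotate, shear, translate):
    print(f.__name__, additive(f, u, v), f((0.0, 0.0)))
```

`rotate` and `shear` pass the additivity check and keep the origin fixed. `translate` fails it and moves the origin to `(1.0, 0.0)`.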

References:

Wolfram MathWorld: Linear Transformation

What is the difference between matrix theory and linear algebra? [closed]

Hi,

Currently, I’m taking matrix theory, and our textbook is Strang’s Linear Algebra.

Besides matrix theory, which all engineers must take, there exists linear algebra I and II for math majors.

What is the difference, if any, between matrix theory and linear algebra?

Thanks!

asked Jan 13, 2010 at 17:17 kolistivra

Likely the version of the course called “linear algebra” is proof-based and gets deeper into the conceptual content, whereas “matrix theory” probably focuses on applications.

It’s a matter of emphasis, really.

– Qiaochu Yuan, Commented Jan 13, 2010 at 17:20

The other difference I’ve seen is that matrix theory usually concentrates on the theory of real and complex matrices.

Linear algebra cares about those, but also rational canonical forms, etc…

– Pace Nielsen, Commented Apr 1, 2010 at 14:52

Answers

Let me elaborate a little on what Steve Huntsman is talking about.

A matrix is just a list of numbers, and you’re allowed to add and multiply matrices by combining those numbers in a certain way.

When you talk about matrices, you’re allowed to talk about things like the entry in the 3rd row and 4th column, and so forth.

In this setting, matrices are useful for representing things like transition probabilities in a Markov chain, where each entry indicates the probability of transitioning from one state to another.

You can do lots of interesting numerical things with matrices, and these interesting numerical things are very important because matrices show up a lot in engineering and the sciences.

In linear algebra, however, you instead talk about linear transformations, which are not (I cannot emphasize this enough) a list of numbers, although sometimes it is convenient to use a particular matrix to write down a linear transformation.

The difference between a linear transformation and a matrix is not easy to grasp the first time you see it, and most people would be fine with conflating the two points of view.

However, when you’re given a linear transformation, you’re not allowed to ask for things like the entry in its 3rd row and 4th column because questions like these depend on a choice of basis.

Instead, you’re only allowed to ask for things that don’t depend on the basis, such as the rank, the trace, the determinant, or the set of eigenvalues.

This point of view may seem unnecessarily restrictive, but it is fundamental to a deeper understanding of pure mathematics.

answered Jan 13, 2010 at 18:29 Qiaochu Yuan
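Qiaochu Yuan's point can be checked on a concrete case. Below, the same linear map is written in two bases: as $A$ in the standard basis, and as $P^{-1}AP$ in the basis given by the columns of $P$. The entries differ, but the trace and the determinant agree. This is a $2\times 2$ sketch using exact fractions; the particular $A$ and $P$ are arbitrary illustrative choices, and the helper names are our own.

```python
from fractions import Fraction


def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]


def inverse_2x2(M):
    """Inverse of an invertible 2 x 2 matrix, with exact Fraction entries."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]


def trace(M):
    return M[0][0] + M[1][1]


def det(M):
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]


A = [[2, 1],
     [0, 3]]   # the map in the standard basis
P = [[1, 1],
     [1, 2]]   # columns of P: the new basis vectors
B = matmul(inverse_2x2(P), matmul(A, P))  # the same map in the new basis

print(B)                   # different entries from A
print(trace(A), trace(B))  # equal traces
print(det(A), det(B))      # equal determinants
```

In this example both matrices happen to be upper triangular, so the same eigenvalues, 2 and 3, can also be read off their diagonals.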

While it is true that people doing “Matrix Theory” often spend a lot of time with a choice of basis, it’s important to note that this is frequently in pursuit of quantities that are invariant of choice of basis.

– Dan Piponi, Commented Jan 13, 2010 at 23:44

An even more basic question, but along the same line, is “What is the difference between a vector and a row (column) matrix?”

Vectors are mathematical objects living in a linear space or vector space (which satisfy certain properties).

Choosing a special set of vectors called a base, we can decompose every vector in the vector space into a kind of sum of vectors in this base.

Thus every vector has a code (its coordinates in the base), and this is the row (column) matrix.

The next step is to look at the homomorphisms (maps) between linear spaces.

Choosing the base of the domain and the range we can represent the homomorphism by a matrix.

– abcdxyz, Commented Jan 14, 2010 at 15:00

Even worse, matrices depend on a choice of an ordered basis.

– Harry Gindi, Commented Mar 30, 2010 at 22:30

Belated comment: Depends on what you call a matrix, Harry.

If $X$ and $Y$ are sets and $K[X]$ and $K[Y]$ are their free $K$-vector spaces, then a linear map $K[X] \to K[Y]$ is the same as a map of sets $X \to K^Y$, that is, a map $X \times Y \to K$.

I’d argue this is what a matrix really is and that ordering is an artifact of trying to write something in linear order on a piece of paper.

– Per Vognsen, Commented Aug 4, 2010 at 5:36
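Per Vognsen's view can be sketched in code. Index a matrix by pairs $(x, y)$ drawn from two finite sets, with no ordering involved, and apply it to a vector represented as a function on $X$ (a dict). The sets, labels, coefficients and the helper `apply` are hypothetical, and only finitely supported vectors are modeled.

```python
from collections import defaultdict

# A "matrix" as a function X x Y -> K, stored as a dict keyed by (x, y).
# No row or column order is involved; X and Y are plain sets of labels.
X = {"apple", "pear"}
Y = {"cost", "weight"}
M = {("apple", "cost"): 3, ("apple", "weight"): 1,
     ("pear", "cost"): 4, ("pear", "weight"): 2}
assert all(x in X and y in Y for x, y in M)


def apply(M, v):
    """Apply the linear map K[X] -> K[Y] determined by M to v (dict x -> coefficient).

    Basis vector x is sent to the sum over y of M[(x, y)] * y, extended linearly.
    """
    out = defaultdict(int)
    for (x, y), m in M.items():
        out[y] += v.get(x, 0) * m
    return dict(out)


print(apply(M, {"apple": 2, "pear": 5}))  # {'cost': 26, 'weight': 12}
```

Only when the labels are listed in some order on paper does this become a rectangular array with a “3rd row and 4th column”.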

A counter-quotation to the one from Dieudonné:

We share a philosophy about linear algebra: we think basis-free, we write basis-free, but when the chips are down we close the office door and compute with matrices like fury.

(Irving Kaplansky, writing of himself and Paul Halmos)

answered Mar 31, 2010 at 8:06 John Stillwell

I totally agree with this one. Thanks for sharing.

– user1855, Commented Apr 2, 2010 at 2:27

+1! I confess that I like this quote much more than the other one (Dieudonné’s), which, at least to me, appears a little arrogant.

In my opinion, “abstract” is not automatically “better.” There are cases when one needs a concrete and efficient computation [Or, are all the algorithms implemented in Matlab just not smart enough because they use matrices?]

– user2734, Commented Apr 2, 2010 at 7:48

To echo the comment above: Kaplansky’s quotation is that much more appropriate for people who code in low-level or numerical languages.

It’s possible to do a heck of a lot of symbolic calculation in such settings through the judicious use of integral matrices (here “integral” should be considered broadly).

– Steve Huntsman, Commented Apr 23, 2010 at 16:26

Let me quote without further comment from Dieudonné’s “Foundations of Modern Analysis, Vol. 1.”

There is hardly any theory which is more elementary [than linear algebra], in spite of the fact that generations of professors and textbook writers have obscured its simplicity by preposterous calculations with matrices.

answered Mar 30, 2010 at 22:06 Konrad Waldorf

You should add this to the great quotes in mathematics thread.

– Harry Gindi, Commented Mar 30, 2010 at 22:30

It is ironic that a textbook on analysis would make such an outrageous claim on the triviality of another field: the analytic parts of linear algebra are truly deep and quite actively researched.

See, for example, Loewner’s classification of matrix-monotone functions, or most any paper in quantum Shannon theory.

Additionally, the entire field of quantum information theory (QIT) is essentially the study of unitary and self-adjoint operators on tensor products of Hilbert spaces, and a large majority of the interesting questions in QIT retain 99% of their interest in the finite-dimensional case.

– Jon, Commented Apr 4, 2010 at 18:06

I don’t think that there are many who can claim to have a better understanding of these things than Dieudonné.

So instead of trying so hard to misunderstand him, try to find a meaning in his comment.

– Tile, Commented Apr 4, 2010 at 18:53

It should also be pointed out that the “analytic parts of linear algebra” are more properly thought of as linear analysis, or in the case of operator monotone functions and calculations with the c.b. norm, even as non-linear analysis.

I think castigating Dieudonné for this quote is taking unnecessary umbrage.

– Yemon Choi, Commented Apr 4, 2010 at 19:25

Of course Dieudonne meant “elementary” as in “simple and foundational”, here not using “simple” to mean easy, but simple in the sense of structural complexity.

It’s not an arrogant statement about how easy he thinks linear algebra is, but rather a castigation of those “generations of professors and textbook writers” who turned an elegant subject into a jumbled mess.

– Harry Gindi, Commented Jun 10, 2010 at 8:45

The difference is that in matrix theory you have chosen a particular basis.

answered Jan 13, 2010 at 17:23 Steve Huntsman

Although some years ago I would have agreed with the above comments about the relationship between Linear Algebra and Matrix Theory, I DO NOT agree any more!

See, for example, Bhatia’s “Matrix Analysis” GTM book. For example, doubly (sub)stochastic matrices arise naturally in the classification of unitarily invariant norms.

They also naturally appear in the study of quantum entanglement, which really has nothing to do with a basis.

(In both instances, all sorts of NON-arbitrary bases come into play, mainly after the spectral theorem gets applied.)

Doubly-stochastic matrices turn out to be useful to give concise proofs of basis-independent inequalities, such as the non-commutative Hölder inequality:

$$\operatorname{tr} |AB| \leq \|A\|_p \|B\|_q$$

with $\frac{1}{p} + \frac{1}{q} = 1$, $|A| = (A^* A)^{1/2}$, and $\|A\|_p = (\operatorname{tr} |A|^p)^{1/p}$.

edited Apr 4, 2010 at 18:10, answered Apr 1, 2010 at 21:08 Jon

Doubly-stochastic matrices (in one interpretation, anyway) describe transition probabilities of some Markov chain where all the transitions are reversible.

The relevant vector space is the free vector space over the states of the chain.

Maybe this interpretation isn’t directly relevant to the application you’re thinking of, but there should be some connection.

– Qiaochu Yuan, Commented Apr 4, 2010 at 18:25

In the application to the Hölder inequality, one uses the fact that if $U$ is a unitary operator, then replacing the matrix elements of $U$ by the squares of their absolute values yields a doubly-stochastic matrix.

– Jon, Commented Apr 7, 2010 at 1:33
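Jon's observation is easy to check numerically. Squaring the absolute values of the entries of a unitary matrix gives a matrix whose rows and columns each sum to 1. The sketch below uses two arbitrary examples: a real rotation, which is orthogonal and therefore unitary, and the complex $3\times 3$ discrete Fourier transform matrix. `is_doubly_stochastic` is an illustrative helper, and the comparison uses a floating-point tolerance.

```python
import cmath
import math


def rotation(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def dft(n):
    """Unitary DFT matrix with entries exp(-2*pi*i*j*k/n) / sqrt(n)."""
    w = cmath.exp(-2j * math.pi / n)
    return [[w ** (j * k) / math.sqrt(n) for k in range(n)] for j in range(n)]


def squared_moduli(U):
    return [[abs(u) ** 2 for u in row] for row in U]


def is_doubly_stochastic(S, tol=1e-12):
    """Nonnegative entries, and every row sum and column sum equal to 1."""
    rows_ok = all(abs(sum(row) - 1) <= tol for row in S)
    cols_ok = all(abs(sum(col) - 1) <= tol for col in zip(*S))
    return rows_ok and cols_ok and all(x >= 0 for row in S for x in row)


for U in (rotation(0.7), dft(3)):
    print(is_doubly_stochastic(squared_moduli(U)))
```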

Matrix theory is the specialization of linear algebra to the case of finite-dimensional vector spaces and doing explicit manipulations after fixing a basis.

More precisely: the algebra of $n \times n$ matrices with coefficients in a field $F$ is isomorphic to the algebra of $F$-linear homomorphisms from an $n$-dimensional vector space $V$ over $F$ to itself.

And the choice of such an isomorphism is precisely the choice of a basis for $V$.

Sometimes you need concrete computations for which you use the matrix viewpoint.

But for conceptual understanding, application to wider contexts and for overall mathematical elegance, the abstract approach of vector spaces and linear transformations is better.

In this second approach you can take over linear algebra to more general settings such as modules over rings (PIDs for instance), functional analysis, homological algebra, representation theory, etc…

All these topics have linear algebra at their heart, or, rather, “is” indeed linear algebra.

edited Mar 30, 2010 at 21:57, answered Jan 24, 2010 at 14:00 Anweshi

I’m with Jon. Matrices don’t always appear as linear transformations.

Yes, you can look at them as linear transformations, but there are times when it’s better not to and study them for their own right.

Jon already gave one example. Another example is the theory of positive (semi)definite matrices.

They appear naturally as covariance matrices of random vectors.

The notions like Schur complements appear naturally in a course in matrix theory, but probably not in linear algebra.

answered Apr 2, 2010 at 2:24 user1855

Covariance matrices are essentially inner products, aren’t they?

That’s just thinking of matrices as tensors of type (0, 2) instead of as tensors of type (1, 1).

I think the theory of linear algebra is really good at clarifying the distinction between this type of matrix and the “usual” type of matrix; for example it gets to the heart of when similarity is relevant vs. when conjugation is relevant.

So I don’t think this is a good example.

– Qiaochu Yuan, Commented Apr 4, 2010 at 18:20

Matrix multiplication: interpreting and understanding the process

I have just watched the first half of the 3rd lecture of Gilbert Strang on MIT OpenCourseWare, at this link: https://ocw.mit.edu/courses/mathematics/18-06-linear-algebra-spring-2010/video-lectures/

Interpretations from the mechanics of overlapping forces come to mind immediately, because that is the source of the dot product (inner product). It seems that with a matrix multiplication $AB = C$, the entries, as scalars, are formed from the dot product computations of the rows of $A$ with the columns of $B$.

Visually, I see the rows of $C$ as being the dot product of the rows of $B$ with the dot product of a particular row of $A$. Similar to the above, it is easy to see this from the individual entries in the matrix $C$ as to which elements change to give which dot products.

For understanding matrix multiplication there is the geometrical interpretation: it is the transformation operator for rotation, scaling, reflection and skew. It is easy to see this by argument, and the argument is strongly convincing of its generality. This interpretation is strong but not smooth. I would find an explanation smoother if it began from the dot product of vectors and used it to explain the process and the interpretation of the results (one which is a bit easier to see without the many examples of putting numbers in and seeing what comes out that students go through).

Matrix multiplication is a change in the reference system, since matrix $B$ can be seen as a constructing example $B$ matrices with these effects on $A$. This decomposition is a strong

I would hope that sticking to dot products throughout the explanation and THEN seeing how these can be seen to produce scalings, rotations, and skewings would be better. But after some simple graphical examples, I saw this doesn’t work, as the order of the columns in matrix $B$ is important and doesn’t show in the graphical representation.

The best explanation I can find is at Yahoo Answers. It is convincing but a bit disappointing (explains why this approach preserves the “composition of linear transformations”; thanks @Arturo Magidin). So the question is: Why does matrix multiplication happen as it does, and are there good practical examples to support it? Preferably not via rotations/scalings/skews (thanks @lhf).

edited Feb 27, 2015 at 19:28, epimorphic

asked Mar 1, 2011 at 18:51, Vass

Matrix multiplication is defined the way it is because it then coincides with composition of linear transformations. There are other matrix multiplications (e.g., the Hadamard product, which is entry-by-entry), but the great advantage of the “usual” matrix multiplication is that it corresponds to composition of linear transformations. – Arturo Magidin Mar 1, 2011 at 19:09

Matrix multiplication doesn’t “happen”; it is defined a certain way. Why is it defined that way? Precisely so that it corresponds to compositions of linear transformations: nothing more and nothing else. For “practical examples”, write out linear transformations in terms of the basis, write out what the composition is, and you’ll see it corresponds to matrix multiplication. There is nothing, exactly, to “support”: the definition was made with one particular purpose in mind, and it achieves that purpose, period. – Arturo Magidin Mar 1, 2011 at 19:26

Supporting Arturo: Matrices are just used to visualise linear transformations. In this manner, the composition of two linear transformations $L_1$ and $L_2$ is a new linear transformation $L_3 = L_1 \circ L_2$, which, when you write it out as matrices, is the product of the matrices. – AD - Stop Putin - Mar 1, 2011 at 19:45

I feel like this is then a question about history: why did (= what historical motivations) matrix multiplication get defined like it is? Did the dot product (which is itself magical in that it gives a projection) come first? – Mitch Mar 1, 2011 at 21:11

Answers

Some comments first. There are several serious confusions in what you write. For example, in the third paragraph, having seen that the entries of $AB$ are obtained by taking the dot product of the corresponding row of $A$ with column of $B$, you write that you view $AB$ as a dot product of rows of $B$ and rows of $A$. It’s not.

For another example, you talk about matrix multiplication “happening”. Matrices aren’t running wild in the hidden jungles of the Amazon, where things “happen” without human beings. Matrix multiplication is defined a certain way, and then the definition is why matrix multiplication is done the way it is done. You may very well ask why matrix multiplication is defined the way it is defined, and whether there are other ways of defining a “multiplication” on matrices (yes, there are; read further), but that’s a completely separate question. “Why does matrix multiplication happen the way it does?” is pretty incoherent on its face.

Another example of confusion is that not every matrix corresponds to a “change in reference system”. This is only true, viewed from the correct angle, for invertible matrices.

Standard matrix multiplication.

Matrix multiplication is defined the way it is because it corresponds to composition of linear transformations. Though this is valid in extremely great generality, let’s focus on linear transformations T : R n → R m T: \mathbb{R}^{n} \to \mathbb{R}^{m} T:Rn→Rm . Since linear transformations satisfy T ( α x + β y ) = α T ( x ) + β T ( y ) T(\alpha x+\beta y)=\alpha T(x)+\beta T(y) T(αx+βy)=αT(x)+βT(y) , if you know the value of T at each of e 1 , . . . , e n e_{1}, ..., e_{n} e1,...,en , where e i n e_{i}^{n} ein is the (column) n -vector that has Os in each coordinate except the ith coordinate where it has a , then you know the value of 1 T at every single vector of R n \mathbb{R}^{n} Rn

矩阵乘法之所以这样定义,是因为它与线性变换的复合相对应。尽管这在极其广泛的范围内都是有效的,但让我们聚焦于线性变换 T : R n → R m T: \mathbb{R}^{n} \to \mathbb{R}^{m} T:Rn→Rm 。由于线性变换满足 T ( α x + β y ) = α T ( x ) + β T ( y ) T(\alpha x+\beta y)=\alpha T(x)+\beta T(y) T(αx+βy)=αT(x)+βT(y) ,如果你知道 T 在 e 1 , … , e n e_{1}, \ldots, e_{n} e1,…,en 中每个向量上的值(其中 e i n e_{i}^{n} ein 是 n 维列向量,除了第 i 个坐标为 1 外,其余每个坐标都为 0),那么你就知道 T 在 R n \mathbb{R}^{n} Rn 中每个向量上的值。can take So in order to describe the value of T , I just need to tell you what T ( e i ) T(e_{i}) T(ei) is. For example, we

因此,为了描述 T 的值,我只需要告诉你 T ( e i ) T(e_{i}) T(ei) 是什么。例如,我们可以取T ( e i ) = ( a 1 i a 2 i ⋮ a m i ) . T\left(e_{i}\right)=\left(\begin{array}{c} a_{1 i} \\ a_{2 i} \\ \vdots \\ a_{m i} \end{array}\right) . T(ei)= a1ia2i⋮ami .

Then, since

那么,因为( k 1 k 2 ⋮ k n ) = k 1 e 1 + ⋯ + k n e n , \left(\begin{array}{c} k_{1} \\ k_{2} \\ \vdots \\ k_{n} \end{array}\right)=k_{1} e_{1}+\cdots+k_{n} e_{n}, k1k2⋮kn =k1e1+⋯+knen,

we have

我们有

T ( k 1 k 2 ⋮ k n ) = k 1 T ( e 1 ) + ⋯ + k n T ( e n ) = k 1 ( a 11 a 21 ⋮ a m 1 ) + ⋯ + k n ( a 1 n a 2 n ⋮ a m n ) . \begin{align*} T\left( \begin{matrix} {{k}_{1}} \\ {{k}_{2}} \\ \vdots \\ {{k}_{n}} \\ \end{matrix} \right) & ={{k}_{1}}T({{\mathbf{e}}_{1}})+\cdots +{{k}_{n}}T({{\mathbf{e}}_{n}}) \\ & ={{k}_{1}}\left( \begin{matrix} {{a}_{11}} \\ {{a}_{21}} \\ \vdots \\ {{a}_{m1}} \\ \end{matrix} \right)+\cdots +{{k}_{n}}\left( \begin{matrix} {{a}_{1n}} \\ {{a}_{2n}} \\ \vdots \\ {{a}_{mn}} \\ \end{matrix} \right). \end{align*} T k1k2⋮kn =k1T(e1)+⋯+knT(en)=k1 a11a21⋮am1 +⋯+kn a1na2n⋮amn .It is very fruitful, then to keep track of the a i j a_{i j} aij in some way, and given the expression above, we think of T as being “given” by the matrix .

因此,以某种方式记录 a i j a_{ij} aij 是非常有益的,并且根据上面的表达式,我们认为 T 是由以下矩阵“给出”的:( a 11 a 12 ⋯ a 1 n a 21 a 22 ⋯ a 2 n ⋮ ⋮ ⋱ ⋮ a m 1 a m 2 ⋯ a m n ) . \left(\begin{array}{cccc} a_{11} & a_{12} & \cdots & a_{1 n} \\ a_{21} & a_{22} & \cdots & a_{2 n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m 1} & a_{m 2} & \cdots & a_{m n} \end{array}\right) . a11a21⋮am1a12a22⋮am2⋯⋯⋱⋯a1na2n⋮amn .

means “transpose”; turn every rown into a column, every column into a row), then we have If we want to keep track of T this way, then for an arbitrary vector x = ( x 1 , . . . , x n ) t x=(x_{1}, ..., x_{n})^{t} x=(x1,...,xn)t (the t that T ( x ) T(x) T(x) corresponds to:

如果我们想以这种方式记录 T,那么对于任意向量 x = ( x 1 , … , x n ) t x=(x_{1}, \ldots, x_{n})^{t} x=(x1,…,xn)t(其中 t 表示“转置”,即把每一行变成一列,每一列变成一行),则 T ( x ) T(x) T(x) 对应于:( a 11 a 12 ⋯ a 1 n a 21 a 22 ⋯ a 2 n ⋮ ⋮ ⋱ ⋮ a m 1 a m 2 ⋯ a m n ) ( x 1 x 2 ⋮ x n ) = ( a 11 x 1 + a 12 x 2 + ⋯ + a 1 n x n a 21 x 1 + a 22 x 2 + ⋯ + a 2 n x n ⋮ a m 1 x 1 + a m 2 x 2 + ⋯ + a m n x n ) . \left(\begin{array}{cccc} a_{11} & a_{12} & \cdots & a_{1 n} \\ a_{21} & a_{22} & \cdots & a_{2 n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m 1} & a_{m 2} & \cdots & a_{m n} \end{array}\right)\left(\begin{array}{c} x_{1} \\ x_{2} \\ \vdots \\ x_{n} \end{array}\right)=\left(\begin{array}{c} a_{11} x_{1}+a_{12} x_{2}+\cdots+a_{1 n} x_{n} \\ a_{21} x_{1}+a_{22} x_{2}+\cdots+a_{2 n} x_{n} \\ \vdots \\ a_{m 1} x_{1}+a_{m 2} x_{2}+\cdots+a_{m n} x_{n} \end{array}\right) . a11a21⋮am1a12a22⋮am2⋯⋯⋱⋯a1na2n⋮amn x1x2⋮xn = a11x1+a12x2+⋯+a1nxna21x1+a22x2+⋯+a2nxn⋮am1x1+am2x2+⋯+amnxn .

What happens when we have linear transformations, two T : R n → R m T: \mathbb{R}^{n} \to \mathbb{R}^{m} T:Rn→Rm and S : R p → R n ? S: \mathbb{R}^{p} \to \mathbb{R}^{n} ? S:Rp→Rn? If T corresponds as above to a certain m × n m ×n m×n matri,then S will ikevis corespond to a certain n × p n ×p n×p matrix, say .

当我们有两个线性变换 T : R n → R m T: \mathbb{R}^{n} \to \mathbb{R}^{m} T:Rn→Rm 和 S : R p → R n S: \mathbb{R}^{p} \to \mathbb{R}^{n} S:Rp→Rn 时,会发生什么呢?如果 T 如上述对应于某个 m × n m×n m×n 矩阵,那么 S 同样会对应于某个 n × p n×p n×p 矩阵,例如:( b 11 b 12 ⋯ b 1 p b 21 b 22 ⋯ b 2 p ⋮ ⋮ ⋱ ⋮ b n 1 b n 2 ⋯ b n p ) . \left(\begin{array}{cccc} b_{11} & b_{12} & \cdots & b_{1 p} \\ b_{21} & b_{22} & \cdots & b_{2 p} \\ \vdots & \vdots & \ddots & \vdots \\ b_{n 1} & b_{n 2} & \cdots & b_{n p} \end{array}\right) . b11b21⋮bn1b12b22⋮bn2⋯⋯⋱⋯b1pb2p⋮bnp .

What is $T\circ S$? First, it is a linear transformation, because composition of linear transformations yields a linear transformation. Second, it goes from $\mathbb{R}^p$ to $\mathbb{R}^m$, so it should correspond to an $m\times p$ matrix. Which matrix? If we let $f_1,\ldots,f_p$ be the (column) $p$-vectors given by letting $f_j$ have 0s everywhere and a 1 in the $j$th entry, then the matrix above tells us that

$T\circ S$ 是什么呢?首先,它是一个线性变换,因为线性变换的复合仍然是线性变换。其次,它从 $\mathbb{R}^p$ 映射到 $\mathbb{R}^m$,所以它应该对应于一个 $m\times p$ 矩阵。是哪个矩阵呢?如果我们令 $f_1,\ldots,f_p$ 为 $p$ 维列向量,其中 $f_j$ 的所有元素都为 0,只有第 $j$ 个元素为 1,那么上面的矩阵告诉我们:

$$S(f_j)=\begin{pmatrix} b_{1j} \\ b_{2j} \\ \vdots \\ b_{nj} \end{pmatrix}=b_{1j}e_1+\cdots+b_{nj}e_n.$$

So, what is $T\circ S(f_j)$? This is what goes in the $j$th column of the matrix that corresponds to $T\circ S$. Evaluating, we have:

那么,$T\circ S(f_j)$ 是什么呢?这就是对应于 $T\circ S$ 的矩阵的第 $j$ 列中的内容。计算可得:

$$\begin{aligned} T\circ S(f_j) &= T\bigl(S(f_j)\bigr)\\ &= T\bigl(b_{1j}e_1+\cdots+b_{nj}e_n\bigr)\\ &= b_{1j}T(e_1)+\cdots+b_{nj}T(e_n)\\ &= b_{1j}\begin{pmatrix} a_{11}\\ a_{21}\\ \vdots\\ a_{m1}\end{pmatrix}+\cdots+b_{nj}\begin{pmatrix} a_{1n}\\ a_{2n}\\ \vdots\\ a_{mn}\end{pmatrix}\\ &= \begin{pmatrix} a_{11}b_{1j}+a_{12}b_{2j}+\cdots+a_{1n}b_{nj}\\ a_{21}b_{1j}+a_{22}b_{2j}+\cdots+a_{2n}b_{nj}\\ \vdots\\ a_{m1}b_{1j}+a_{m2}b_{2j}+\cdots+a_{mn}b_{nj}\end{pmatrix}. \end{aligned}$$
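A quick numerical check of the column argument above, again a sketch assuming NumPy, with random integer matrices standing in for $T$ and $S$ (the names `A`, `B` and the seed are illustrative assumptions): column $j$ of $AB$ is exactly $T(S(f_j))$, and applying $S$ and then $T$ to any vector agrees with multiplying by $AB$.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.integers(-5, 6, size=(2, 3))   # matrix of T: R^3 -> R^2 (m = 2, n = 3)
B = rng.integers(-5, 6, size=(3, 4))   # matrix of S: R^4 -> R^3 (n = 3, p = 4)

def T(v):
    return A @ v

def S(v):
    return B @ v

AB = A @ B                              # an m x p = 2 x 4 matrix
p = B.shape[1]
for j in range(p):
    f_j = np.zeros(p, dtype=int)
    f_j[j] = 1                          # j-th standard basis vector of R^p
    assert np.array_equal(S(f_j), B[:, j])      # S(f_j) is column j of B
    assert np.array_equal(T(S(f_j)), AB[:, j])  # T(S(f_j)) is column j of AB

x = rng.integers(-5, 6, size=p)
assert np.array_equal(T(S(x)), AB @ x)  # (T o S)(x) equals (AB) x
print(AB.shape)                         # (2, 4)
```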

So if we want to write down the matrix that corresponds to $T\circ S$, then the $(i,j)$th entry will be

因此,如果我们想写出对应于 $T\circ S$ 的矩阵,那么第 $(i,j)$ 个元素将会是

$$a_{i1}b_{1j}+a_{i2}b_{2j}+\cdots+a_{in}b_{nj}.$$

So we define the “composition” or product of the matrix of $T$ with the matrix of $S$ to be precisely the matrix of $T\circ S$. We can make this definition without reference to the linear transformations that gave it birth: if the matrix of $T$ is $m\times n$ with entries $a_{ij}$ (let’s call it $A$), and the matrix of $S$ is $n\times p$ with entries $b_{rs}$ (let’s call it $B$), then the matrix of $T\circ S$ (let’s call it $A\circ B$ or $AB$) is $m\times p$, with entries $c_{k\ell}$, where

因此,我们把 $T$ 的矩阵与 $S$ 的矩阵的“复合”或乘积定义为恰好是 $T\circ S$ 的矩阵。我们可以不参考产生这个定义的线性变换来给出这个定义:如果 $T$ 的矩阵是 $m\times n$ 的,元素为 $a_{ij}$(我们称之为 $A$);$S$ 的矩阵是 $n\times p$ 的,元素为 $b_{rs}$(我们称之为 $B$),那么 $T\circ S$ 的矩阵(我们称之为 $A\circ B$ 或 $AB$)是 $m\times p$ 的,元素为 $c_{k\ell}$,其中

$$c_{k\ell}=a_{k1}b_{1\ell}+a_{k2}b_{2\ell}+\cdots+a_{kn}b_{n\ell}.$$
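The entry formula $c_{k\ell}=a_{k1}b_{1\ell}+\cdots+a_{kn}b_{n\ell}$ can be transcribed almost literally. The function `matmul` below is a hypothetical, deliberately naive pure-Python sketch (lists of rows, 0-based indices), checked against NumPy; it mirrors the definition and makes no attempt to be fast.

```python
import numpy as np

def matmul(A, B):
    """Multiply an m x n matrix A by an n x p matrix B (lists of rows)
    using c[k][ell] = a[k][0]*b[0][ell] + ... + a[k][n-1]*b[n-1][ell]."""
    m, n = len(A), len(A[0])
    if len(B) != n:
        raise ValueError("A is m x n, so B must have n rows")
    p = len(B[0])
    return [[sum(A[k][i] * B[i][ell] for i in range(n)) for ell in range(p)]
            for k in range(m)]

A = [[1, 2], [3, 4], [5, 6]]      # 3 x 2
B = [[7, 8, 9], [10, 11, 12]]     # 2 x 3
C = matmul(A, B)                  # 3 x 3
print(C)  # [[27, 30, 33], [61, 68, 75], [95, 106, 117]]
assert C == (np.array(A) @ np.array(B)).tolist()
```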

That is the definition. Why? Because then the matrix of the composition of two functions is precisely the product of the matrices of the two functions, so we can work with the matrices directly without having to think about the functions.

这就是定义。为什么这样定义?因为这样一来,两个函数复合的矩阵恰好就是这两个函数的矩阵的乘积,于是我们可以直接对矩阵进行运算,而不必考虑函数本身。

In point of fact, there is nothing about the dot product which is at play in this definition. It is essentially by happenstance that the $(i,j)$ entry can be obtained as a dot product of something. In fact, the $(i,j)$th entry is obtained as the matrix product of the $1\times n$ matrix consisting of the $i$th row of $A$ with the $n\times 1$ matrix consisting of the $j$th column of $B$. Only if you transpose this column can you try to interpret this as a dot product. (In fact, the modern view is the other way around: we define the dot product of two vectors as a special case of a more general inner product, called the Frobenius inner product, which is defined in terms of matrix multiplication: $\langle x,y\rangle=\operatorname{trace}(\overline{y^t}\,x)$.)

事实上,这个定义中并没有涉及点积的内容。$(i,j)$ 元素可以通过某种点积得到,这本质上是一种巧合。实际上,矩阵乘积的第 $(i,j)$ 个元素是由 $A$ 的第 $i$ 行组成的 $1\times n$ 矩阵与 $B$ 的第 $j$ 列组成的 $n\times 1$ 矩阵的矩阵乘积。只有当你把这个列向量转置后,才能尝试将其解释为点积。(事实上,现代的观点恰好相反:我们把两个向量的点积定义为一种更一般的内积(称为弗罗贝尼乌斯内积)的特例,而后者是用矩阵乘法定义的:$\langle x,y\rangle=\operatorname{trace}(\overline{y^t}\,x)$。)
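A small sketch of both points in this paragraph, assuming NumPy: the $(i,j)$ entry is literally a $1\times n$ by $n\times 1$ matrix product (indices here are 0-based), and for column vectors the Frobenius inner product $\operatorname{trace}(\overline{y^t}\,x)$ reduces to the usual complex inner product, which NumPy's `np.vdot` computes by conjugating its first argument. The sample matrices and vectors are arbitrary.

```python
import numpy as np

A = np.array([[1, 2, 3],
              [4, 5, 6]])              # 2 x 3
B = np.array([[1, 0],
              [2, 1],
              [0, 3]])                 # 3 x 2
i, j = 1, 1                            # 0-based: second row of A, second column of B

row_i = A[i:i + 1, :]                  # a 1 x n matrix
col_j = B[:, j:j + 1]                  # an n x 1 matrix
entry = (row_i @ col_j)[0, 0]          # product of a 1 x n and an n x 1 matrix
assert entry == (A @ B)[i, j]
assert entry == np.dot(A[i, :], B[:, j])   # the "dot product" reading uses 1-D vectors

# Frobenius inner product of column vectors: trace(conj(y^t) x) = sum_k conj(y_k) x_k
x = np.array([[1 + 2j], [3 - 1j]])     # 2 x 1
y = np.array([[2 - 1j], [1j]])         # 2 x 1
frob = np.trace(np.conj(y.T) @ x)
assert np.isclose(frob, np.vdot(y, x))  # np.vdot conjugates its first argument
print(entry, frob)
```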

And because product of matrices corresponds to composition of linear transformations, all the nice properties that composition of linear functions has will automatically also be true for product of matrices, because products of matrices are nothing more than a book-keeping device for keeping track of the composition of linear transformations. So $(AB)C=A(BC)$, because composition of functions is associative. $A(B+C)=AB+AC$, because composition of linear transformations distributes over sums of linear transformations (sums of matrices are defined entry-by-entry because that agrees precisely with the sum of linear transformations).

并且由于矩阵的乘积对应于线性变换的复合,线性函数的复合所具有的所有良好性质也会自动适用于矩阵的乘积,因为矩阵的乘积只不过是记录线性变换复合的一种记账工具。因此,$(AB)C=A(BC)$,因为函数的复合是可结合的;$A(B+C)=AB+AC$,因为线性变换的复合对线性变换的和满足分配律(矩阵的和是按元素定义的,这恰好与线性变换的和一致)。

$A(\alpha B)=\alpha(AB)=(\alpha A)B$, because composition of linear transformations behaves that way with scalar multiplication (products of matrices by scalars are defined the way they are precisely so that they will correspond to the operation with linear transformations).

$A(\alpha B)=\alpha(AB)=(\alpha A)B$,因为线性变换的复合与数乘运算的作用方式就是如此(矩阵与标量的乘积的定义方式也恰好与线性变换的数乘相对应)。矩阵的各种运算之所以这样定义,正是为了与线性变换的运算相对应。
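The identities $(AB)C=A(BC)$, $A(B+C)=AB+AC$ and $A(\alpha B)=\alpha(AB)=(\alpha A)B$ can be spot-checked numerically. This is only a sanity check on random integer matrices (an illustrative assumption, not a proof); integers keep the comparisons exact, and $B$, $C$ are both $3\times 3$ so that the same names fit all three identities.

```python
import numpy as np

rng = np.random.default_rng(1)
A = rng.integers(-4, 5, size=(2, 3))
B = rng.integers(-4, 5, size=(3, 3))
C = rng.integers(-4, 5, size=(3, 3))
alpha = 7

assert np.array_equal((A @ B) @ C, A @ (B @ C))          # (AB)C = A(BC)
assert np.array_equal(A @ (B + C), A @ B + A @ C)         # A(B+C) = AB + AC
assert np.array_equal(A @ (alpha * B), alpha * (A @ B))   # A(aB) = a(AB)
assert np.array_equal(alpha * (A @ B), (alpha * A) @ B)   # a(AB) = (aA)B
print("all four identities hold for this sample")
```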

So we define product of matrices explicitly so that it will match up with composition of linear transformations. There really is no deeper hidden reason. It seems a bit incongruous, perhaps, that such a simple reason results in such a complicated formula, but such is life.

因此,我们明确地定义矩阵的乘积,使其与线性变换的复合相匹配。真的没有更深层次的隐藏原因。也许,如此简单的原因导致了如此复杂的公式,这看起来有点不协调,但事实就是这样。

Another reason why it is somewhat misguided to try to understand matrix product in terms of dot product is that the matrix product keeps track of all the information lying around about the two compositions, but the dot product loses a lot of information about the two vectors in question. Knowing that $x\cdot y=0$ only tells you that $x$ and $y$ are perpendicular; it doesn’t really tell you anything else. There is a lot of informational loss in the dot product, and trying to explain matrix product in terms of the dot product requires that we “recover” all of this lost information in some way. In practice, it means keeping track of all the original information, which makes trying to shoehorn the dot product into the explanation unnecessary, because you will already have all the information to get the product directly.

试图从点积的角度理解矩阵乘积之所以有些误入歧途,另一个原因是:矩阵乘积记录了关于这两个复合的所有信息,而点积则丢失了关于这两个向量的大量信息。知道 $x\cdot y=0$ 只能告诉你 $x$ 和 $y$ 是垂直的,并不能真正告诉你其他任何信息。点积中存在大量的信息丢失,而试图用点积来解释矩阵乘积,就需要我们以某种方式“恢复”所有这些丢失的信息。实际上,这意味着要记录所有的原始信息,这使得强行把点积塞进解释中变得没有必要,因为你已经拥有了直接得到乘积所需的全部信息。

Examples that are not just “changes in reference system”. Note that any linear transformation corresponds to a matrix. But the only linear transformations that can be thought of as “changes in perspective” are the linear transformations that map $\mathbb{R}^n$ to itself, and which are one-to-one and onto. There are lots of linear transformations that aren’t like that. For example, the linear transformation $T$ from $\mathbb{R}^3$ to $\mathbb{R}^2$ defined by

不只是“参考系变化”的例子。请注意,任何线性变换都对应于一个矩阵,但只有那些将 $\mathbb{R}^n$ 映射到自身、并且既是单射又是满射的线性变换,才能被视为“视角的变化”。有很多线性变换并非如此。例如,从 $\mathbb{R}^3$ 到 $\mathbb{R}^2$ 的线性变换 $T$ 定义为:

$$T\begin{pmatrix} a \\ b \\ c \end{pmatrix}=\begin{pmatrix} b \\ 2c \end{pmatrix}$$

这个线性变换并不是“参考系的变化”(因为很多非零向量会被映射到零向量,而你不可能仅仅通过“改变视角”就把非零向量看成零向量),但它仍然是一个线性变换。其对应的矩阵是 $2\times 3$ 的:

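is not a “change in reference system” (because lots of nonzero vectors go to zero, but there is no way to just “change your perspective” and start seeing a nonzero vector as zero) but is a linear transformation nonetheless. The corresponding matrix is $2\times 3$, and is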

$$\begin{pmatrix} 0 & 1 & 0 \\ 0 & 0 & 2 \end{pmatrix}.$$

Now consider the linear transformation $U:\mathbb{R}^2\to\mathbb{R}^2$ given by

现在考虑由下式给出的线性变换 $U:\mathbb{R}^2\to\mathbb{R}^2$:

$$U\begin{pmatrix} x \\ y \end{pmatrix}=\begin{pmatrix} 3x+2y \\ 9x+6y \end{pmatrix}.$$

Again, this is not a “change in perspective”, because the vector $\binom{2}{-3}$ is mapped to $\binom{0}{0}$. It has a matrix, which is $2\times 2$, and is

$$\begin{pmatrix} 3 & 2 \\ 9 & 6 \end{pmatrix}.$$

同样,这也不是“视角的变化”,因为向量 $\begin{pmatrix} 2 \\ -3 \end{pmatrix}$ 被映射到 $\begin{pmatrix} 0 \\ 0 \end{pmatrix}$。它对应的矩阵是 $2\times 2$ 的:$\begin{pmatrix} 3 & 2 \\ 9 & 6 \end{pmatrix}$。

So the composition $U\circ T$ has matrix:

因此,复合变换 $U\circ T$ 的矩阵为:

$$\begin{pmatrix} 3 & 2 \\ 9 & 6 \end{pmatrix}\begin{pmatrix} 0 & 1 & 0 \\ 0 & 0 & 2 \end{pmatrix}=\begin{pmatrix} 0 & 3 & 4 \\ 0 & 9 & 12 \end{pmatrix},$$

which tells me that

$$U\circ T\begin{pmatrix} x \\ y \\ z \end{pmatrix}=\begin{pmatrix} 3y+4z \\ 9y+12z \end{pmatrix}.$$

Other matrix products. Are there other ways to define the product of two matrices? Sure. There’s the Hadamard product, which is the “obvious” thing to try: you can multiply two matrices of the same size (and only of the same size), and you do it entry by entry, just the same way that you add two matrices. This has some nice properties, but it has nothing to do with linear transformations. There’s the Kronecker product, which takes an $m\times n$ matrix times a $p\times q$ matrix and gives an $mp\times nq$ matrix. This one is associated to the tensor product of linear transformations. They are defined differently because they are meant to model other operations that one does with matrices or vectors.

其他矩阵乘积。还有其他方式定义两个矩阵的乘积吗?当然有。有哈达马积,这是“显而易见”的尝试:你可以把两个同阶(且只能是同阶)的矩阵相乘,按元素逐个进行,就像两个矩阵相加一样。它有一些很好的性质,但与线性变换毫无关系。还有克罗内克积,它将一个 $m\times n$ 矩阵与一个 $p\times q$ 矩阵相乘,得到一个 $mp\times nq$ 矩阵,它与线性变换的张量积相关联。它们的定义方式不同,因为它们旨在刻画人们对矩阵或向量进行的其他运算。

edited Jun 18, 2019 at 9:45, answered Mar 1, 2011 at 20:32, Arturo Magidin
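Arturo Magidin's $U\circ T$ example and the two alternative products he mentions can be reproduced in a few lines, as a sketch assuming NumPy: `@` is the ordinary matrix product, `*` on two arrays of the same shape is the entrywise (Hadamard) product, and `np.kron` is the Kronecker product. The second factor in the Hadamard example is an arbitrary illustrative matrix.

```python
import numpy as np

T = np.array([[0, 1, 0],
              [0, 0, 2]])        # T(a, b, c) = (b, 2c): a 2 x 3 matrix
U = np.array([[3, 2],
              [9, 6]])           # U(x, y) = (3x + 2y, 9x + 6y): a 2 x 2 matrix

UT = U @ T                                # matrix of U o T (T acts first)
print(UT.tolist())                        # [[0, 3, 4], [0, 9, 12]]
print((U @ np.array([2, -3])).tolist())   # [0, 0]: a nonzero vector sent to zero

# Hadamard product: entry by entry, defined only for arrays of the same shape.
H = U * np.array([[1, 0],
                  [2, 1]])
print(H.tolist())                         # [[3, 0], [18, 6]]

# Kronecker product: an m x n times a p x q matrix gives an mp x nq matrix.
K = np.kron(T, U)
print(K.shape)                            # (4, 6)
```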

An amazing answer. This is better than some textbooks or internet resources. – Vass, Mar 2, 2011 at 12:53

这个回答太棒了,比一些教科书或网络资源还要好。

Is a linear combination also a linear transformation? – Vass, Mar 2, 2011 at 14:57

线性组合也是线性变换吗?

@Vass: No. A linear transformation is a function from a vector space to a vector space that is additive and homogeneous. A linear combination is the result of adding up scalar multiples of vectors; it’s not a function. – Arturo Magidin, Mar 2, 2011 at 16:52

@Vass:不是。线性变换是从一个向量空间到另一个向量空间的函数,它具有可加性和齐次性。线性组合是向量的标量倍数相加的结果,它不是一个函数。

I think part of the problem people have with getting used to linear transformations vs. matrices is that they have probably never seen an example of a linear transformation defined without reference to a matrix or a basis. So here is such an example. Let $V$ be the vector space of real polynomials of degree at most 3, and let $f:V\to V$ be the derivative.

我认为人们在适应线性变换与矩阵的区别时遇到的部分问题是,他们可能从未见过不参考矩阵或基来定义的线性变换的例子。所以这里有一个这样的例子。设 $V$ 是次数至多为 3 的实多项式构成的向量空间,令 $f:V\to V$ 为求导运算。

$V$ does not come equipped with a natural choice of basis. You might argue that $\{1,x,x^2,x^3\}$ is natural, but it’s only convenient: there’s no reason to privilege this basis over $\{1,(x+c),(x+c)^2,(x+c)^3\}$ for any $c\in\mathbb{R}$ (and, depending on what my definitions are, it is literally impossible to do so). More generally, $\{a_0(x),a_1(x),a_2(x),a_3(x)\}$ is a basis for any collection of polynomials $a_i$ of degree $i$.

$V$ 并没有一个自然的基的选择。你可能会说 $\{1,x,x^2,x^3\}$ 是自然的,但这只是方便而已:没有理由认为这个基比对于任何 $c\in\mathbb{R}$ 的 $\{1,(x+c),(x+c)^2,(x+c)^3\}$ 更优越(而且,取决于我采用的定义,实际上根本不可能这样做)。更一般地,对于任何一组次数为 $i$ 的多项式 $a_i$,$\{a_0(x),a_1(x),a_2(x),a_3(x)\}$ 都是一个基。

$V$ also does not come equipped with a natural choice of dot product, so there’s no way to include those in the discussion without making an arbitrary choice. It really is just a vector space equipped with a linear transformation.

V 也没有一个自然的点积选择,所以无法在不做出任意选择的情况下将点积纳入讨论中。它确实只是一个配备了线性变换的向量空间。

Since we want to talk about composition, let’s write down a second linear transformation. $g:V\to V$ will send a polynomial $p(x)$ to the polynomial $p(x+1)$. Note that, once again, I do not need to refer to a basis to define $g$.

因为我们想讨论复合,所以让我们写出第二个线性变换。$g:V\to V$ 将多项式 $p(x)$ 映射到多项式 $p(x+1)$。注意,我再次不需要参考基来定义 $g$。

Then the abstract composition $gf:V\to V$ is well-defined; it sends a polynomial $p(x)$ to the polynomial $p'(x+1)$. I don’t need to refer to a basis or multiply any matrices to see this; all I am doing is composing two functions.

那么抽象的复合变换 $gf:V\to V$ 是有明确定义的,它将多项式 $p(x)$ 映射到多项式 $p'(x+1)$。我不需要参考基或进行任何矩阵乘法就能理解这一点,我所做的只是复合两个函数。

Now let’s do everything in a particular basis to see that we get the same answer using the correct and natural definition of matrix multiplication. We’ll use the basis $\{1,x,x^2,x^3\}$. In this basis $f$ has matrix

现在让我们在一个特定的基下进行所有操作,以验证使用矩阵乘法的正确且自然的定义会得到相同的结果。我们将使用基 $\{1,x,x^2,x^3\}$。在这个基下,$f$ 的矩阵为

$$\begin{bmatrix} 0 & 1 & 0 & 0 \\ 0 & 0 & 2 & 0 \\ 0 & 0 & 0 & 3 \\ 0 & 0 & 0 & 0 \end{bmatrix}$$

and $g$ has matrix

$g$ 的矩阵为

$$\begin{bmatrix} 1 & 1 & 1 & 1 \\ 0 & 1 & 2 & 3 \\ 0 & 0 & 1 & 3 \\ 0 & 0 & 0 & 1 \end{bmatrix}$$

Now I encourage you to go through all the generalities in Arturo’s post in this example to verify that $gf$ has the matrix it is supposed to have.

现在,我建议你在这个例子中把 Arturo 帖子里的所有一般性论述逐一走一遍,以验证 $gf$ 具有它应有的矩阵。
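To carry out the suggested check, here is a sketch assuming NumPy. It multiplies the two matrices in the order $GF$ (since $f$ is applied first) and compares the result, applied to the coefficient vector of a sample polynomial, with $p'(x+1)$ computed independently via `numpy.polynomial.Polynomial`, whose `deriv` method differentiates and whose call operator accepts another polynomial (substitution). The sample polynomial is an arbitrary choice.

```python
import numpy as np
from numpy.polynomial import Polynomial

# Coordinates w.r.t. the basis {1, x, x^2, x^3}: p = c0 + c1*x + c2*x^2 + c3*x^3
F = np.array([[0, 1, 0, 0],
              [0, 0, 2, 0],
              [0, 0, 0, 3],
              [0, 0, 0, 0]])     # f: p(x) -> p'(x)
G = np.array([[1, 1, 1, 1],
              [0, 1, 2, 3],
              [0, 0, 1, 3],
              [0, 0, 0, 1]])     # g: p(x) -> p(x + 1)

GF = G @ F                        # matrix of g o f (f acts first): p(x) -> p'(x + 1)
print(GF.tolist())  # [[0, 1, 2, 3], [0, 0, 2, 6], [0, 0, 0, 3], [0, 0, 0, 0]]

# Independent check on a sample polynomial, without using any matrices.
p = Polynomial([5, -1, 4, 2])                        # 5 - x + 4x^2 + 2x^3
shifted_derivative = p.deriv()(Polynomial([1, 1]))   # substitute x + 1 into p'
expected = np.zeros(4)
expected[:len(shifted_derivative.coef)] = shifted_derivative.coef
assert np.allclose(GF @ p.coef, expected)  # both give 13 + 20x + 6x^2
```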

Intuition behind Matrix Multiplication

矩阵乘法背后的直观理解

If I multiply two numbers, say 3 and 5, I know it means add 3 to itself 5 times or add 5 to itself 3 times.

如果我将两个数相乘,比如 3 和 5,我知道这意味着把 3 加自己 5 次,或者把 5 加自己 3 次。

But if I multiply two matrices, what does it mean? I mean, I can’t think of it in terms of repeated addition.

但如果我将两个矩阵相乘,这意味着什么呢?我的意思是,我没法从重复加法的角度去理解它。

What is the intuitive way of thinking about multiplication of matrices?

理解矩阵乘法的直观方式是什么?

edited Aug 10, 2013 at 15:28,Jeel Shah

asked Apr 8, 2011 at 12:41, Happy Mittal

Do you think of $3.9289482948290348290\times 9.2398492482903482390$ as repetitive addition?

你会把 $3.9289482948290348290\times 9.2398492482903482390$ 理解为重复加法吗?

– lhf,Commented Apr 8, 2011 at 12:43

It depends on what you mean by ‘meaning’.

这取决于你说的“意义”是什么意思。

– Mitch,Commented Apr 8, 2011 at 12:58

I believe a more interesting question is why matrix multiplication is defined the way it is.

我认为一个更有趣的问题是,矩阵乘法为什么是这样定义的。

– M.B.

Commented Apr 8, 2011 at 13:01

You probably want to read this answer of Arturo Magidin’s: Matrix Multiplication: Interpreting and Understanding the Multiplication Process

你或许想看看阿图罗·马吉丁的这个回答:矩阵乘法:解读和理解乘法过程

– Uticensis

Commented Apr 8, 2011 at 16:42

Answers

Matrix “multiplication” is the composition of two linear functions. The composition of two linear functions is a linear function.

矩阵“乘法”是两个线性函数的复合。两个线性函数的复合仍是一个线性函数。

If a linear function is represented by $A$ and another by $B$, then $AB$ is their composition. $BA$ is their reverse composition.

如果一个线性函数由 $A$ 表示,另一个由 $B$ 表示,那么 $AB$ 就是它们的复合,$BA$ 则是它们的反向复合。

That’s one way of thinking of it. It explains why matrix multiplication is the way it is instead of piecewise multiplication.

这是理解它的一种方式。这解释了为什么矩阵乘法是这样定义的,而不是按分量相乘。

answered Apr 8, 2011 at 13:00

Searke

Actually, I think it’s the only (sensible) way of thinking of it. Textbooks which only give the definition in terms of coordinates, without at least mentioning the connection with composition of linear maps, (such as my first textbook on linear algebra!) do the student a disservice.

事实上,我认为这是(合理的)唯一理解方式。那些只从坐标角度给出定义、甚至不提它与线性映射复合之间联系的教科书(比如我的第一本线性代数教材!),是在亏待学生。

– wildildildlife

Commented Apr 8, 2011 at 16:26

Learners, couple this knowledge with mathinsight.org/matrices_linear_transformations. It may save you a good amount of time.

学习者们,把这个知识和 mathinsight.org/matrices_linear_transformations 结合起来。这可能会帮你节省相当多的时间。

– n611x007

Commented Jan 30, 2013 at 16:36

Indeed, there is a sense in which all associative binary operators with an identity element are represented as composition of functions - that is the underlying nature of associativity. (If there isn’t an identity, you get a representation as composition, but it might not be faithful.)

的确,在某种意义上,所有具有单位元的结合二元运算都可以表示为函数的复合——这正是结合性的本质。(如果没有单位元,你也能得到一个复合表示,但它可能不是忠实的。)

– Thomas Andrews

Commented Aug 10, 2013 at 15:30

can you give an example of what you mean?

你能举个例子说明你的意思吗?

– JobHunter69

Commented Mar 11, 2016 at 21:18

I found the Wikipedia entry on Linear Maps and specifically the Matrices section very useful to grok this: en.m.wikipedia.org/wiki/Linear_map

我发现维基百科上关于线性映射的条目,特别是其中的矩阵部分,对理解这一点非常有帮助:en.m.wikipedia.org/wiki/Linear_map

– Angad

Commented Dec 3, 2023 at 14:54

Asking why matrix multiplication isn’t just componentwise multiplication is an excellent question: in fact, componentwise multiplication is in some sense the most “natural” generalization of real multiplication to matrices: it satisfies all of the axioms you would expect (associativity, commutativity, existence of identity and inverses (for matrices with no 0 entries), distributivity over addition).

问为什么矩阵乘法不只是按分量相乘,这是一个很好的问题:事实上,在某种意义上,按分量相乘是实数乘法到矩阵的最“自然”的推广:它满足所有你期望的公理(结合律、交换律、单位元与逆元的存在性(对于没有 0 元素的矩阵)、对加法的分配律)。

The usual matrix multiplication in fact “gives up” commutativity; we all know that in general $AB\neq BA$, while for real numbers $ab=ba$. What do we gain? Invariance with respect to change of basis. If $P$ is an invertible matrix,

通常的矩阵乘法实际上“放弃”了交换律;我们都知道,一般来说 $AB\neq BA$,而对于实数,$ab=ba$。但我们得到了什么呢?是关于基变换的不变性。如果 $P$ 是一个可逆矩阵,

$$\begin{aligned} P^{-1}AP+P^{-1}BP &= P^{-1}(A+B)P \\ (P^{-1}AP)(P^{-1}BP) &= P^{-1}(AB)P \end{aligned}$$

In other words, it doesn’t matter what basis you use to represent the matrices $A$ and $B$: no matter what choice you make, their sum and product are the same (as linear maps).

换句话说,你用什么基来表示矩阵 $A$ 和 $B$ 并不重要:无论你选择什么基,它们的和与积(作为线性映射)都是一样的。

It is easy to see by trying an example that the second property does not hold for multiplication defined component-wise. This is because the inverse of a change of basis $P^{-1}$ no longer corresponds to the multiplicative inverse of $P$.

通过举例很容易看出,对于按分量定义的乘法,第二个性质并不成立。这是因为基变换的逆 $P^{-1}$ 不再对应于 $P$ 的乘法逆。
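A numerical illustration of the two identities and of the failure for componentwise multiplication, as a sketch assuming NumPy. Following user7530's reading in the comment thread, both products are compared after changing basis with the usual $P^{-1}MP$; `A`, `B`, `P` and the helper `to_new_basis` are illustrative choices, and one counterexample shows only that the property fails for this instance.

```python
import numpy as np

A = np.array([[1.0, 2.0], [3.0, 4.0]])
B = np.array([[0.0, 1.0], [5.0, -2.0]])
P = np.array([[1.0, 1.0], [0.0, 1.0]])   # an invertible change-of-basis matrix
P_inv = np.linalg.inv(P)

def to_new_basis(M):
    """Express the matrix M in the new basis: P^{-1} M P."""
    return P_inv @ M @ P

# Sum and ordinary product are compatible with the change of basis.
assert np.allclose(to_new_basis(A) + to_new_basis(B), to_new_basis(A + B))
assert np.allclose(to_new_basis(A) @ to_new_basis(B), to_new_basis(A @ B))

# The entrywise (Hadamard) product is not, for this choice of A, B, P.
lhs = to_new_basis(A) * to_new_basis(B)
rhs = to_new_basis(A * B)
print(np.allclose(lhs, rhs))   # False
```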

edited Jul 28, 2020 at 9:49, bilibraker

answered Apr 8, 2011 at 15:41, user7530

+1, but I can’t help but point out that if componentwise multiplication is the most “natural” generalization, it is also the most boring generalization, in that under componentwise operations, a matrix is just a flat collection of $mn$ real numbers instead of being a new and useful structure with interesting properties.

+1,但我忍不住要指出,如果按分量相乘是最“自然”的推广,那它也是最“无聊”的推广,因为在按分量运算下,矩阵只不过是 $mn$ 个实数的扁平集合,而不是一个具有有趣性质的新的、有用的结构。

– user856, Commented Apr 8, 2011 at 17:09

I don’t get it. For element-wise multiplication, we would define $P^{-1}$ as essentially element-wise reciprocals, so $PP^{-1}$ would be the identity, and the second property you mention would still hold. It is the essence of $P^{-1}$ to be the inverse of $P$. How else would you define $P^{-1}$?

我不明白。对于按元素相乘,我们可以把 $P^{-1}$ 定义为本质上是按元素取倒数,这样 $PP^{-1}$ 就是单位矩阵,你提到的第二个性质仍然成立。$P^{-1}$ 的本质就是 $P$ 的逆。不然你还能怎么定义 $P^{-1}$ 呢?

– Yatharth Agarwal, Commented Nov 3, 2018 at 20:27

I also don’t get it. Under elementwise multiplication, we have commutativity. Then $P^{-1}AP=A$, a change of basis does nothing, and showing we have the two invariance properties is trivial.

我也不明白。在按元素相乘下,我们有交换律。那么 $P^{-1}AP=A$,基变换什么都不做,这两个不变性性质的成立就很显然了。

– Accidental Statistician, Commented Sep 8, 2021 at 23:14

@AccidentalStatistician Change of basis means: take a vector expressed as a linear combination in one basis, and find the linear combination in a different basis that yields the same vector. This operation is independent of how you define or “redefine” multiplication. If $\odot$ is componentwise multiplication, then matrices transform under change of basis like $P^{-1}A\odot P$. The second multiplication is componentwise, but the inverse and the first multiplication are still the usual ones.

@AccidentalStatistician 基变换的意思是:取一个在某个基下表示为线性组合的向量,然后找到在另一个基下能得到同一个向量的线性组合。这个操作与你如何定义或“重新定义”乘法无关。如果 $\odot$ 是按分量相乘,那么矩阵在基变换下的变换形式是 $P^{-1}A\odot P$。第二个乘法是按分量的,但逆运算和第一个乘法仍然是通常的那种。

– user7530

Commented Sep 8, 2021 at 23:50

By Flanigan & Kazdan:

Instead of looking at a “box of numbers”, look at the “total action” after applying the whole thing. It’s an automorphism of linear spaces, meaning that in some vector-linear-algebra-type situation this is “turning things over and over in your hands without breaking the algebra that makes it be what it is”. (Modulo some things—like maybe you want a constant determinant.)

不要只看一个“数字盒子”,而要看看应用整个矩阵后的“整体作用”。它是线性空间的自同构,意思是在某些向量线性代数的情境中,这就像是“在手中反复翻转东西,却不破坏使其成为自身的代数结构”。(除了一些东西——比如你可能想要一个常数行列式。)

This is also why order matters: if you compose the matrices in one direction it might not be the same as the other.

这也是为什么顺序很重要:按一个顺序复合矩阵,结果可能和按另一个顺序复合不一样。

$${}^1_4\Box^2_3 \xrightarrow{\mathbf{V}\updownarrow} {}^4_1\Box^3_2 \xrightarrow{\Theta_{90}\curvearrowright} {}^1_2\Box^4_3$$

versus

$${}^1_4\Box^2_3 \xrightarrow{\Theta_{90}\curvearrowright} {}^4_3\Box^1_2 \xrightarrow{\mathbf{V}\updownarrow} {}^3_4\Box^2_1$$
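The two diagrams above can be reproduced with $2\times 2$ matrices, as a sketch under stated assumptions: the square has corners at $(\pm1,\pm1)$ with the $y$-axis pointing up, $\mathbf{V}$ is read as a top-bottom flip $(x,y)\mapsto(x,-y)$, and $\Theta_{90}$ as a clockwise quarter turn $(x,y)\mapsto(y,-x)$. Tracking the corner labelled 1 shows that the order of composition matters.

```python
import numpy as np

V = np.array([[1, 0],
              [0, -1]])      # flip top <-> bottom: (x, y) -> (x, -y)
R = np.array([[0, 1],
              [-1, 0]])      # quarter turn clockwise: (x, y) -> (y, -x)

corner_1 = np.array([-1, 1])  # the label "1" starts at the top-left corner

flip_then_rotate = R @ V      # the rightmost factor acts first
rotate_then_flip = V @ R

print((flip_then_rotate @ corner_1).tolist())  # [-1, 1]: back at the top-left
print((rotate_then_flip @ corner_1).tolist())  # [1, -1]: now at the bottom-right
print(np.array_equal(flip_then_rotate, rotate_then_flip))  # False
```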

The actions can be composed (one after the other)—that’s what multiplying matrices does. Eventually the matrix representing the overall cumulative effect of whatever things you composed, should be applied to something. For this you can say “the plane”, or pick a few points, or draw an F, or use a real picture (computers are good at linear transformations after all).

这些作用可以复合(一个接一个)——这就是矩阵相乘的意义。最终,代表你所复合的所有东西的整体累积效果的矩阵,应该应用到某个对象上。对此,你可以说“平面”,或者选几个点,或者画一个 F,或者用一张真实的图片(毕竟计算机很擅长线性变换)。

You could also watch the matrices work on Mona step by step, to help your intuition.

你也可以一步步观察矩阵对蒙娜丽莎的作用,以帮助建立直观理解。

Finally I think you can think of matrices as “multidimensional multiplication”.

最后,我认为你可以把矩阵看作“多维乘法”。

$y=mx+b$ is affine; the truly “linear” map (keeping $0\overset{f}{\longmapsto}0$) would be less complicated: just

$$y=mx$$

which is an “even” stretching/dilation.

这是一种“均匀的”拉伸/伸缩。

$$\vec{y}=[\mathbf{M}]\,\vec{x}$$

really is the multi-dimensional version of the same thing; it’s just that when you have multiple numbers in each $x$, each of the dimensions can impinge on each other,

确实是同一件事的多维版本,只是当每个 $x$ 中有多个数时,各个维度之间可能相互影响,

for example in the case of a rotation. In physics it doesn’t matter which orthonormal coordinate system you choose, so we want to “quotient away” that invariant in our physical theories.

例如在旋转的情况下——在物理学中,你选择哪个正交坐标系并不重要,所以我们希望在物理理论中“剔除”这种不变性。

edited Feb 10, 2019 at 22:10, Glorfindel

answered Oct 3, 2014 at 5:39, isomorphismes

The short answer is that a matrix corresponds to a linear transformation. To multiply two matrices is the same thing as composing the corresponding linear transformations (or linear maps).

简而言之,一个矩阵对应一个线性变换。相乘两个矩阵等同于复合相应的线性变换(或线性映射)。

The following is covered in a text on linear algebra (such as Hoffman-Kunze):

以下内容在线性代数教材(如霍夫曼-孔泽的教材)中会讲到:

This makes most sense in the context of vector spaces over a field. You can talk about vector spaces and (linear) maps between them without ever mentioning a basis. When you pick a basis, you can write the elements of your vector space as a sum of basis elements with coefficients in your base field (that is, you get explicit coordinates for your vectors in terms of for instance real numbers). If you want to compute something, you typically pick bases for your vector spaces. Then you can represent your linear map as a matrix with respect to the given bases, with entries in your base field (see e.g. the above mentioned book for details as to how). We define matrix multiplication such that matrix multiplication corresponds to composition of the linear maps.

这在域上的向量空间背景下最有意义。你可以讨论向量空间以及它们之间的(线性)映射,而完全不提基。当你选定一个基时,你可以把向量空间的元素写成基元素的线性组合,系数来自基域(也就是说,你得到了向量的明确坐标,例如用实数表示)。如果你想计算什么,通常会为向量空间选定基。然后,你可以将线性映射用关于给定基的矩阵来表示,矩阵的元素来自基域(如何表示的细节可参见上述提到的教材)。我们定义矩阵乘法,使得矩阵乘法对应于线性映射的复合。

Added (Details on the presentation of a linear map by a matrix). Let $V$ and $W$ be two vector spaces with ordered bases $e_1,\ldots,e_n$ and $f_1,\ldots,f_m$ respectively, and $L:V\to W$ a linear map.

补充(线性映射由矩阵表示的细节)。设 $V$ 和 $W$ 是两个向量空间,分别具有有序基 $e_1,\ldots,e_n$ 和 $f_1,\ldots,f_m$,且 $L:V\to W$ 是一个线性映射。

First note that since the $e_j$ generate $V$ and $L$ is linear, $L$ is completely determined by the images of the $e_j$ in $W$, that is, $L(e_j)$. Explicitly, note that by the definition of a basis any $v\in V$ has a unique expression of the form $a_1e_1+\cdots+a_ne_n$, and $L$ applied to this pans out as $a_1L(e_1)+\cdots+a_nL(e_n)$.

首先注意,由于 $e_j$ 张成 $V$ 且 $L$ 是线性的,$L$ 完全由 $e_j$ 在 $W$ 中的像,即 $L(e_j)$ 所确定。具体来说,由基的定义可知,任何 $v\in V$ 都有唯一的表达式 $a_1e_1+\cdots+a_ne_n$,将 $L$ 应用于该式可得 $a_1L(e_1)+\cdots+a_nL(e_n)$。

Now, since $L(e_j)$ is in $W$ it has a unique expression of the form $b_1f_1+\cdots+b_mf_m$, and it is clear that the value of $e_j$ under $L$ is uniquely determined by $b_1,\ldots,b_m$, the coefficients of $L(e_j)$ with respect to the given ordered basis for $W$. In order to keep track of which $L(e_j)$ the $b_i$ are meant to represent, we write (abusing notation for a moment) $m_{ij}=b_i$, yielding the matrix $(m_{ij})$ of $L$ with respect to the given ordered bases.

现在,由于 $L(e_j)$ 在 $W$ 中,它有唯一的表达式 $b_1f_1+\cdots+b_mf_m$,显然,$e_j$ 在 $L$ 作用下的值由 $b_1,\ldots,b_m$ 唯一确定,这些是 $L(e_j)$ 关于给定的 $W$ 的有序基的系数。为了记录 $b_i$ 对应的是哪个 $L(e_j)$,我们(暂时滥用符号)记 $m_{ij}=b_i$,从而得到 $L$ 关于给定有序基的矩阵 $(m_{ij})$。

This might be enough to play around with why matrix multiplication is defined the way it is. Try, for instance, a single vector space $V$ with basis $e_1,\ldots,e_n$, and compute the corresponding matrix of the square $L^2=L\circ L$ of a single linear transformation $L:V\to V$, or, say, compute the matrix corresponding to the identity transformation $v\mapsto v$.

这可能足以帮助理解矩阵乘法为什么是这样定义的。例如,取一个具有基 $e_1,\ldots,e_n$ 的向量空间 $V$,计算单个线性变换 $L:V\to V$ 的平方 $L^2=L\circ L$ 对应的矩阵,或者计算恒等变换 $v\mapsto v$ 对应的矩阵。
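The recipe in this answer (column $j$ of the matrix holds the coordinates of $L(e_j)$) can be sketched in NumPy for the special case of $\mathbb{R}^n$ with the standard basis; this restriction is an assumption made to keep the example short, since the answer allows arbitrary ordered bases. The shear `L` and the helper `matrix_of` are hypothetical illustrations. The sketch then does the suggested exercise: the matrix built from $L(L(e_j))$ equals $M\cdot M$, and the identity map gives the identity matrix.

```python
import numpy as np

def matrix_of(L, n):
    """Matrix of a linear map L: R^n -> R^m w.r.t. the standard bases:
    column j holds the coordinates of L(e_j)."""
    columns = []
    for j in range(n):
        e_j = np.zeros(n)
        e_j[j] = 1.0
        columns.append(L(e_j))
    return np.column_stack(columns)

def L(v):
    """A hypothetical example map (a shear): (x, y) -> (x + 2y, y)."""
    x, y = v
    return np.array([x + 2 * y, y])

M = matrix_of(L, 2)                        # [[1, 2], [0, 1]]
M_sq = matrix_of(lambda v: L(L(v)), 2)     # built from L(L(e_j)) directly
assert np.allclose(M_sq, M @ M)            # composition <-> matrix product
I = matrix_of(lambda v: v, 2)              # the identity map v -> v
assert np.allclose(I, np.eye(2))
print(M.tolist(), M_sq.tolist())  # [[1.0, 2.0], [0.0, 1.0]] [[1.0, 4.0], [0.0, 1.0]]
```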

edited Jul 4, 2015 at 8:29, answered Apr 8, 2011 at 13:04, Eivind Dahl

First, understand vector multiplication by a scalar.

首先,理解向量与标量的乘法。

Then, think of a matrix multiplied by a vector. The matrix is a “vector of vectors”.

然后,思考矩阵与向量的乘法。矩阵是一个“向量的向量”。

Finally, Matrix × Matrix extends the former concept.

最后,矩阵×矩阵是对前一个概念的扩展。

answered Apr 8, 2011 at 22:56, hh

This is a great answer. Not explained enough, though. It is similar to the way Gilbert Strang “builds” up matrix multiplication in his video lectures.

这是一个很棒的回答。不过还可以再解释得充分些。这和吉尔伯特·斯特朗在他的视频讲座中“构建”矩阵乘法的方式很相似。

– Vass,Commented May 18, 2011 at 15:49

This is the best answer so far as you do not need to know about transformations.

这是目前最好的答案,因为你不需要了解变换。

– matqkks, Commented Aug 6, 2013 at 20:14

The problem with this approach is to motivate the multiplication of a matrix by a vector.

这种方法的问题在于,它难以解释矩阵与向量相乘的动机。

– Maxis Jaisi,Commented May 19, 2017 at 6:30

Apart from the interpretation as the composition of linear functions (which is, in my opinion, the most natural one), another viewpoint is on some occasions useful.

除了将其理解为线性函数的复合(在我看来,这是最自然的一种理解)之外,另一种观点在某些情况下也很有用。

You can view them as something akin to a generalization of elementary row/column operations. If you compute $AB$, then the coefficients in the $j$-th row of $A$ tell you which linear combination of rows of $B$ you should compute and put into the $j$-th row of the new matrix.

你可以把它们看作是初等行/列变换的一种推广。如果你计算 $AB$,那么 $A$ 的第 $j$ 行的系数会告诉你,应该计算 $B$ 的行的哪种线性组合,并将其放入新矩阵的第 $j$ 行。

Similarly, you can view $AB$ as making linear combinations of columns of $A$, with the coefficients prescribed by the matrix $B$.

类似地,你可以把 $AB$ 看作是对 $A$ 的列进行线性组合,组合的系数由矩阵 $B$ 规定。
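Both readings of $AB$ in this answer can be checked directly, in a sketch assuming NumPy with small arbitrary matrices: each row of $AB$ is a linear combination of the rows of $B$ with coefficients from the corresponding row of $A$, and each column of $AB$ is a linear combination of the columns of $A$ with coefficients from the corresponding column of $B$ (indices are 0-based).

```python
import numpy as np

A = np.array([[1, 2],
              [0, -1],
              [3, 1]])           # 3 x 2
B = np.array([[2, 0, 1],
              [1, 4, -2]])       # 2 x 3
AB = A @ B

# Row j of AB = sum over k of A[j, k] * (row k of B)
for j in range(A.shape[0]):
    row = sum(A[j, k] * B[k, :] for k in range(A.shape[1]))
    assert np.array_equal(AB[j, :], row)

# Column ell of AB = sum over k of B[k, ell] * (column k of A)
for ell in range(B.shape[1]):
    col = sum(B[k, ell] * A[:, k] for k in range(B.shape[0]))
    assert np.array_equal(AB[:, ell], col)

print(AB.tolist())  # [[4, 8, -3], [-1, -4, 2], [7, 4, 1]]
```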

With this viewpoint in mind you can easily see that, if you denote by a ⃗ 1 , … , a ⃗ k \vec{a}_1,\ldots,\vec{a}_k a1,…,ak the rows of the matrix A A A, then the equality

考虑到这个观点,你可以很容易地看出,如果用 a ⃗ 1 , … , a ⃗ k \vec{a}_1,\ldots,\vec{a}_k a1,…,ak 表示矩阵 A A A 的行,那么下面的等式成立:

$$\begin{pmatrix} \vec{a}_1 \\ \vdots \\ \vec{a}_k \end{pmatrix} B = \begin{pmatrix} \vec{a}_1B \\ \vdots \\ \vec{a}_kB \end{pmatrix}$$

holds. (Of course, you can obtain this equality directly from the definition or by many other methods. My intention was to illustrate a situation where familiarity with this viewpoint could be useful.)

(当然,你可以直接从定义或通过许多其他方法得到这个等式。我的目的是说明,熟悉这个观点在某些情况下是有用的。)
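Both viewpoints can be checked against the usual entry formula. This is a minimal sketch with arbitrary example matrices stored as lists of rows. `row_view` builds each row of $AB$ as a combination of the rows of $B$, which is exactly the stacked identity $\vec{a}_j B$ above. `column_view` builds each column of $AB$ as a combination of the columns of $A$.

```python
# Sketch: AB via row combinations, via column combinations, and via entries.

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def combine(coeffs, vectors):
    """Linear combination sum_k coeffs[k] * vectors[k]."""
    out = [0] * len(vectors[0])
    for c, v in zip(coeffs, vectors):
        out = [o + c * vi for o, vi in zip(out, v)]
    return out

def row_view(A, B):
    # Row j of AB: combine the rows of B with the coefficients in row j of A.
    return [combine(a_row, B) for a_row in A]

def column_view(A, B):
    # Column j of AB: combine the columns of A with the coefficients in column j of B.
    A_cols = [list(c) for c in zip(*A)]
    B_cols = [list(c) for c in zip(*B)]
    cols = [combine(b_col, A_cols) for b_col in B_cols]
    return [list(r) for r in zip(*cols)]  # back to a list of rows

A = [[1, 2, 0],
     [0, 1, -1]]   # 2x3
B = [[1, 0],
     [2, 1],
     [3, -2]]      # 3x2

print(row_view(A, B))                                     # [[5, 2], [-1, 3]]
print(row_view(A, B) == matmul(A, B) == column_view(A, B))  # True
```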

edited Jan 5, 2017 at 9:14, answered Apr 8, 2011 at 13:28, Martin Sleziak

I came here to talk about composition of linear functions, or elementary row/column operations. But you already said it all. Great job, +1, and you would deserve more.

我是来讨论线性函数的复合,或者初等行/列变换的。但你已经把所有都说了。做得好,+1,而且你值得更多赞。

– Wok, Commented Apr 8, 2011 at 21:01

Suppose

假设

$$\begin{align*} p & =2x+3y \\ q & =3x-7y \\ r & =-8x+9y \end{align*}$$

Represent this transformation from $[x \ y]$ to $\begin{bmatrix} p \\ q \\ r \end{bmatrix}$ by the matrix

用矩阵将 $[x \ y]$ 变换为 $\begin{bmatrix} p \\ q \\ r \end{bmatrix}$,表示如下:

$$\begin{bmatrix} 2 & 3 \\ 3 & -7 \\ -8 & 9 \end{bmatrix}$$

Now let’s transform $\begin{bmatrix} p \\ q \\ r \end{bmatrix}$ to $[a \ b]$:

现在让我们将 $\begin{bmatrix} p \\ q \\ r \end{bmatrix}$ 变换为 $[a \ b]$:

$$\begin{align*} a & =22p-38q+17r \\ b & =13p+10q+9r \end{align*}$$

Represent that by the matrix

用矩阵表示如下:

$$\begin{bmatrix} 22 & -38 & 17 \\ 13 & 10 & 9 \end{bmatrix}$$

So how do we transform $[x \ y]$ directly to $[a \ b]$?

那么,我们如何将 $[x \ y]$ 直接变换为 $[a \ b]$ 呢?

Do a bit of algebra and you get

做一点代数运算,你会得到

$$\begin{align*} a & = \bullet\, x + \bullet\, y \\ b & = \bullet\, x + \bullet\, y \end{align*}$$

and you should be able to figure out what numbers the four $\bullet$s are. That matrix of four $\bullet$s is what you get when you multiply those earlier matrices. That’s why matrix multiplication is defined the way it is.

你应该能弄清楚这四个 $\bullet$ 是什么数字。这个由四个 $\bullet$ 组成的矩阵,就是你将前面那些矩阵相乘得到的结果。这就是矩阵乘法为什么是这样定义的原因。
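Spoiler for the exercise above. The following sketch, which is not part of the quoted answer, finds the four $\bullet$s by substitution and compares them with a matrix product. It assumes both coefficient arrays act on column vectors. The map $(p,q,r) \mapsto (a,b)$ is therefore written as the $2 \times 3$ matrix with rows $(22, -38, 17)$ and $(13, 10, 9)$, and it goes on the left because it is applied second.

```python
# Sketch: the four bullets by substitution vs. the matrix product M2 · M1.
# Matrices are lists of rows; vectors are treated as columns.

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

M1 = [[2, 3], [3, -7], [-8, 9]]    # (x, y)    -> (p, q, r)
M2 = [[22, -38, 17], [13, 10, 9]]  # (p, q, r) -> (a, b)

def compose(x, y):
    p, q, r = 2*x + 3*y, 3*x - 7*y, -8*x + 9*y
    return 22*p - 38*q + 17*r, 13*p + 10*q + 9*r

# Coefficient of x: plug in (1, 0); coefficient of y: plug in (0, 1).
a_x, b_x = compose(1, 0)
a_y, b_y = compose(0, 1)
bullets = [[a_x, a_y], [b_x, b_y]]

print(bullets)                     # [[-206, 485], [-16, 50]]
print(bullets == matmul(M2, M1))   # True
```

So $a = -206x + 485y$ and $b = -16x + 50y$.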

edited Jul 14, 2015 at 20:13, answered Jul 7, 2013 at 21:06, Michael Hardy

Perhaps a link to the other answer with some explanation of how it applies to this problem would be better than duplicating an answer. This meta question, and those cited therein, is a good discussion of the concerns.

或许,提供一个指向另一个回答的链接,并解释它如何适用于这个问题,会比重复一个回答更好。这个元问题以及其中引用的内容,对相关问题进行了很好的讨论。

– robjohn, Commented Jul 8, 2013 at 14:22

I like this answer. Linear algebra is really about linear systems, so this answer fits in with that definition of linear algebra.

我喜欢这个回答。因为线性代数确实是关于线性方程组的,所以这个回答与线性代数的这一定义相契合。

– matqkks, Commented Aug 6, 2013 at 20:16

@Guandalino: It was not “obtained”; rather it is part of that from which one obtains something else, where the four $\bullet$s appear.

@Guandalino:它不是“得到的”;相反,它是用来得到其他东西(即那四个 $\bullet$ 出现的地方)的一部分。

– Michael Hardy, Commented Jun 27, 2014 at 16:38

That’s what I thought you meant. If someone is telling you how to calculate $42 \times 19$, getting $798$, then it makes sense to wonder how $798$ was calculated, but $42$ and $19$ were not somehow calculated; rather they were chosen as an example. The same is true of the numbers you mention.

我就是这么理解你的意思的。如果有人告诉你如何计算 $42 \times 19$ 得到 $798$,那么想知道 $798$ 是怎么算出来的是合理的,但 $42$ 和 $19$ 并不是通过某种计算得到的;它们只是被选作例子。你提到的这些数字也是如此。

– Michael Hardy, Commented Jun 27, 2014 at 19:31

To get away from how these two kinds of multiplications are implemented (repeated addition for numbers vs. row/column dot product for matrices) and how they behave symbolically/algebraically (associativity, distribute over ‘addition’, have annihilators, etc), I’d like to answer in the manner ‘what are they good for?’

抛开这两种乘法的实现方式(数字的重复加法 vs 矩阵的行/列点积)以及它们在符号/代数层面的性质(结合律、对“加法”的分配律、存在零化元等)不谈,我想以“它们有什么用”的方式来回答。

Multiplication of numbers gives area of a rectangle with sides given by the multiplicands (or number of total points if thinking discretely).

数字的乘法给出了以乘数为边长的矩形的面积(如果从离散角度考虑,就是总点数)。

Multiplication of matrices (since it involves quite a few more actual numbers than just simple multiplication) is understandably quite a bit more complex. Though the composition of linear functions (as mentioned elsewhere) is the essence of it, that’s not the most intuitive description of it (to someone without the abstract algebraic experience). A more visual intuition is that one matrix, multiplying another, transforms a set of points (the columns of the right-hand matrix) into a new set of points (the columns of the resulting matrix). That is, take a set of $n$ points in $n$ dimensions and put them as columns in an $n \times n$ matrix; if you multiply this from the left by another $n \times n$ matrix, you’ll transform that ‘cloud’ of points to a new cloud.

矩阵的乘法(由于它比简单的乘法涉及更多的实际数字)显然要复杂得多。尽管线性函数的复合(如其他地方所提到的)是其本质,但对于没有抽象代数经验的人来说,这并不是最直观的描述。一种更视觉化的直观理解是,一个矩阵与另一个矩阵相乘,会将一组点(右手边矩阵的列)变换为一组新的点(结果矩阵的列)。也就是说,取 $n$ 维空间中的 $n$ 个点,将它们作为列放入一个 $n \times n$ 矩阵中;如果用另一个 $n \times n$ 矩阵从左边乘它,你会把这团“点云”变换成新的点云。

This transformation isn’t some random thing; it might rotate the cloud, it might expand the cloud, it won’t ‘translate’ the cloud, it might collapse the cloud into a line (a lower number of dimensions might be needed). But it’s transforming the whole cloud all at once smoothly (near points get mapped to near points).

这种变换不是随机的;它可能会旋转点云,可能会扩张点云,不会“平移”点云,可能会把点云压缩成一条线(可能需要更低的维度)。但它会一次性平滑地变换整个点云(邻近的点会变换到邻近的点)。

So that is one way of getting the ‘meaning’ of matrix multiplication.

这就是理解矩阵乘法“意义”的一种方式。
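The point-cloud picture can be tried in two dimensions. The following is a small sketch with arbitrary example matrices: a 90° rotation and a rank-one matrix that collapses the plane onto the line $y = x$. The points are the columns of `P`, and the moved points are read off the columns of the product. The last line shows why a linear map cannot translate the cloud: the origin always stays at the origin.

```python
# Sketch: points as columns of P; left-multiplying by T moves every point at once.

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def columns(M):
    return [list(c) for c in zip(*M)]

P = [[1, 0],       # the points (1, 2) and (0, 1), stored as columns
     [2, 1]]

rotate = [[0, -1],   # rotation by 90 degrees counterclockwise
          [1,  0]]
collapse = [[1, 1],  # sends every point onto the line y = x
            [1, 1]]

print(columns(matmul(rotate, P)))    # [[-2, 1], [-1, 0]]
print(columns(matmul(collapse, P)))  # [[3, 3], [1, 1]]

# The origin is fixed by every linear map, so the cloud is never translated.
print(matmul(rotate, [[0], [0]]))    # [[0], [0]]
```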

I have a hard time getting a good metaphor (any metaphor) between matrix multiplication and simple numerical multiplication so I won’t force it - hopefully someone else might be able to come up with a better visualization that shows how they’re more alike beyond the similarity of some algebraic properties.

我很难在矩阵乘法和简单的数字乘法之间找到一个好的比喻(任何比喻都很难),所以我不会强求——希望其他人能想出一个更好的可视化方式,来展示除了一些代数性质的相似性之外,它们还有哪些更相似的地方。

edited Sep 17, 2015 at 13:13, answered Apr 8, 2011 at 20:53, Mitch

You shouldn’t try to think in terms of scalars and try to fit matrices into this way of thinking. It’s exactly like with real and complex numbers. It’s difficult to have an intuition about complex operations if you try to think in terms of real operations.

你不应该从标量的角度去思考,试图把矩阵硬塞进这种思维方式里。这和实数与复数的情况完全一样。如果你试图从实数运算的角度去理解复数运算,很难形成直观感受。

Scalars are a special case of matrices, as real numbers are a special case of complex numbers.

标量是矩阵的特例,就像实数是复数的特例一样。

So you need to look at it from the other, more abstract side. If you think about real operations in terms of complex operations, they make complete sense (they are a simple case of the complex operations).

所以你需要从另一个更抽象的角度来看待它。如果你从复数运算的角度去理解实数运算,它们就完全说得通了(它们是复数运算的简单情况)。

And the same is true for matrices and scalars. Think in terms of matrix operations and you will see that the scalar operations are a simple (special) case of the corresponding matrix operations.

矩阵和标量的情况也是如此。从矩阵运算的角度去思考,你会发现标量运算只是相应矩阵运算的简单(特殊)情况。

edited Feb 21, 2016 at 23:24, John B

answered Apr 8, 2011 at 17:38, user9310

Let’s explain where matrices and matrix multiplication come from. But first a bit of notation: $e_i$ denotes the column vector in $\mathbb{R}^n$ which has a 1 in the $i$th position and zeros elsewhere:

让我们解释一下矩阵和矩阵乘法的来源。但首先说明一点符号:$e_i$ 表示 $\mathbb{R}^n$ 中的列向量,它在第 $i$ 个位置上是 1,其他位置都是 0:

$$e_1 = \begin{bmatrix} 1 \\ 0 \\ 0 \\ \vdots \\ 0 \end{bmatrix}, \quad e_2 = \begin{bmatrix} 0 \\ 1 \\ 0 \\ \vdots \\ 0 \end{bmatrix}, \quad \ldots, \quad e_n = \begin{bmatrix} 0 \\ 0 \\ 0 \\ \vdots \\ 1 \end{bmatrix}$$

Suppose that $T: \mathbb{R}^n \to \mathbb{R}^m$ is a linear transformation. The matrix

假设 $T: \mathbb{R}^n \to \mathbb{R}^m$ 是一个线性变换。矩阵

$$A = [T(e_1) \ T(e_2) \ \cdots \ T(e_n)]$$

is called the matrix description of $T$. If we are given $A$, then we can easily compute $T(x)$ for any vector $x \in \mathbb{R}^n$, as follows: If

称为 $T$ 的矩阵表示。如果我们已知 $A$,那么我们可以很容易地计算任何向量 $x \in \mathbb{R}^n$ 在 $T$ 作用下的结果 $T(x)$,如下所示:如果

$$x = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}$$

then

那么

$$T(x) = T(x_1 e_1 + \cdots + x_n e_n) = x_1 \underbrace{T(e_1)}_{\text{known}} + \cdots + x_n \underbrace{T(e_n)}_{\text{known}}. \tag{1}$$

In other words, $T(x)$ is a linear combination of the columns of $A$. To save writing, we will denote this particular linear combination as $Ax$:

换句话说,$T(x)$ 是 $A$ 的列的线性组合。为了简化书写,我们将这种特定的线性组合记为 $Ax$:

$$Ax = x_1 \cdot (\text{first column of } A) + x_2 \cdot (\text{second column of } A) + \cdots + x_n \cdot (\text{final column of } A)$$

With this notation, equation (1) can be written as

有了这个符号,等式可以写成

$$T(x) = Ax$$
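Equation (1) can be checked on a concrete map. The following is a minimal sketch with a hypothetical $T: \mathbb{R}^3 \to \mathbb{R}^2$ chosen only for illustration. The columns of $A$ are the images $T(e_j)$. $Ax$ is then computed purely as the linear combination $x_1 \cdot (\text{first column}) + \cdots + x_n \cdot (\text{final column})$ and compared with $T(x)$.

```python
# Sketch of T(x) = Ax, where A's columns are T(e_1), ..., T(e_n).
# T is a hypothetical linear map R^3 -> R^2, used only as an example.

def T(x):
    x1, x2, x3 = x
    return [x1 - 2 * x3, 4 * x2 + x3]

n = 3
basis = [[1 if i == j else 0 for i in range(n)] for j in range(n)]  # e_1, ..., e_n
A_cols = [T(e) for e in basis]  # columns of A: [1, 0], [0, 4], [-2, 1]

def A_times(x):
    """Ax = x_1·(first column of A) + ... + x_n·(final column of A)."""
    out = [0] * len(A_cols[0])
    for xj, col in zip(x, A_cols):
        out = [o + xj * c for o, c in zip(out, col)]
    return out

x = [3, -1, 2]
print(T(x), A_times(x))  # [-1, -2] [-1, -2]
```

Knowing only the $n$ columns of $A$ is enough to reproduce $T$ on every input, which is what "known" under the braces in (1) expresses.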

Now suppose that $T: \mathbb{R}^n \to \mathbb{R}^m$ and $S: \mathbb{R}^m \to \mathbb{R}^p$ are linear transformations, and that $T$ and $S$ are represented by the matrices $A$ and $B$ respectively. Let $a_i$ be the $i$th column of $A$.

现在假设 $T: \mathbb{R}^n \to \mathbb{R}^m$ 和 $S: \mathbb{R}^m \to \mathbb{R}^p$ 是线性变换,且 $T$ 和 $S$ 分别由矩阵 $A$ 和 $B$ 表示。设 $a_i$ 是 $A$ 的第 $i$ 列。

Question: What is the matrix description of $S \circ T$?

问题:$S \circ T$ 的矩阵表示是什么?

Answer: If $C$ is the matrix description of $S \circ T$, then the $i$th column of $C$ is

答案:如果 $C$ 是 $S \circ T$ 的矩阵表示,那么 $C$ 的第 $i$ 列是

$$c_i = (S \circ T)(e_i) = S(T(e_i)) = S(a_i) = Ba_i$$

For this reason, we define the “product” of $B$ and $A$ to be the matrix whose $i$th column is $Ba_i$. With this notation, the matrix description of $S \circ T$ is

出于这个原因,我们定义 $B$ 和 $A$ 的“乘积”为这样一个矩阵:它的第 $i$ 列是 $Ba_i$。有了这个符号,$S \circ T$ 的矩阵表示是

$$C = BA$$

If you write everything out in terms of components, you will observe that this definition agrees with the usual definition of matrix multiplication.

如果你用分量把所有东西都写出来,你会发现这个定义与通常的矩阵乘法定义是一致的。
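The claim that the column-by-column definition agrees with the usual one can be spot-checked. The following is a minimal sketch with hypothetical maps $T: \mathbb{R}^2 \to \mathbb{R}^3$ and $S: \mathbb{R}^3 \to \mathbb{R}^2$. It builds three things and compares them: the matrix $C$ of $S \circ T$ directly from $(S \circ T)(e_i)$, the product defined by $c_i = Ba_i$, and the usual entry formula $(BA)_{ij} = \sum_k B_{ik} A_{kj}$.

```python
# Sketch: matrix of S∘T vs. BA defined by columns vs. BA defined by entries.
# T and S are hypothetical example maps; matrices are lists of rows.

def T(x):
    return [x[0] + x[1], 2 * x[0], -x[1]]  # R^2 -> R^3

def S(y):
    return [y[0] - y[2], 3 * y[1] + y[2]]  # R^3 -> R^2

def matrix_of(f, n):
    cols = [f([1 if i == j else 0 for i in range(n)]) for j in range(n)]
    return [list(r) for r in zip(*cols)]  # columns -> rows

def mat_vec(M, v):
    return [sum(m * vi for m, vi in zip(row, v)) for row in M]

def product_by_columns(B, A):
    a_cols = [list(c) for c in zip(*A)]       # a_1, ..., a_n
    c_cols = [mat_vec(B, a) for a in a_cols]  # c_i = B a_i
    return [list(r) for r in zip(*c_cols)]

def product_by_entries(B, A):
    return [[sum(B[i][k] * A[k][j] for k in range(len(A)))
             for j in range(len(A[0]))] for i in range(len(B))]

A = matrix_of(T, 2)                  # 3x2
B = matrix_of(S, 3)                  # 2x3
C = matrix_of(lambda x: S(T(x)), 2)  # matrix of S∘T, 2x2

print(C)                                                         # [[1, 2], [6, -1]]
print(C == product_by_columns(B, A) == product_by_entries(B, A))  # True
```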

That is how matrices and matrix multiplication can be discovered.

这就是矩阵和矩阵乘法的由来。

answered Dec 24, 2018 at 7:21, littleO

via:

直观理解线性代数的一些概念 - Spark & Shine

https://sparkandshine.net/understanding-linear-algebra-intuitively-some-concepts/

What is the difference between matrix theory and linear algebra?

https://math.stackexchange.com/questions/996/what-is-the-difference-between-matrix-theory-and-linear-algebra

matrices - Intuition behind Matrix Multiplication - Mathematics Stack Exchange

https://math.stackexchange.com/questions/31725/intuition-behind-matrix-multiplication

linear algebra - Matrix multiplication: interpreting and understanding the process

https://math.stackexchange.com/questions/24456/matrix-multiplication-interpreting-and-understanding-the-process/24469#24469

linear algebra - Is there a relationship between vector spaces and fields/rings/groups? - asked Sep 7, 2015 at 17:47

https://math.stackexchange.com/questions/1425631/is-there-a-relationship-between-vector-spaces-and-fields-rings-groups