@浙大疏锦行作业:

kaggle找到一个图像数据集,用cnn网络进行训练并且用grad-cam做可视化

进阶:并拆分成多个文件

我找的是kaggle上的猫狗二分类训练集

import torch

import torch.nn as nn

import torch.nn.functional as F

import torchvision

import torchvision.datasets as datasets

import torchvision.transforms as transforms

import numpy as np

import matplotlib.pyplot as plt

from PIL import Image

import os

import warnings

from torch.utils.data import random_split

warnings.filterwarnings("ignore")

# 设置中文字体支持

plt.rcParams["font.family"] = ["SimHei", "DejaVu Sans"]

plt.rcParams['axes.unicode_minus'] = False

# 设置随机种子

torch.manual_seed(42)

np.random.seed(42)

# 检查GPU可用性

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(f"使用设备: {device}")

# 数据预处理和增强

train_transform = transforms.Compose([

transforms.Resize((224, 224)),

transforms.RandomHorizontalFlip(),

transforms.RandomRotation(10),

transforms.ColorJitter(brightness=0.2, contrast=0.2, saturation=0.2),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

])

test_transform = transforms.Compose([

transforms.Resize((224, 224)),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

])

# 加载数据集

train_dataset = datasets.ImageFolder(

root=r'D:\BaiduNetdiskDownload\kaggle\kaggle\train\train',

transform=train_transform

)

trainloader = torch.utils.data.DataLoader(

train_dataset,

batch_size=64,

shuffle=True,

num_workers=0

)

# 加载数据集

test_dataset = datasets.ImageFolder(

root=r'D:\BaiduNetdiskDownload\kaggle\kaggle\test1',

transform=test_transform

)

testloader = torch.utils.data.DataLoader(

test_dataset,

batch_size=64,

shuffle=False, # 测试集不需要打乱

num_workers=0

)

# 打印数据集信息

print(f"训练集大小: {len(train_dataset)}")

print(f"类别数量: {len(train_dataset.classes)}")

print(f"类别名称: {train_dataset.classes}")

print(f"测试集大小: {len(test_dataset)}")

# 修正后的CNN模型

class SimpleCNN(nn.Module):

def __init__(self, num_classes=2): # 猫狗二分类,所以num_classes=2

super(SimpleCNN, self).__init__()

self.conv1 = nn.Conv2d(3, 32, kernel_size=3, padding=1)

self.conv2 = nn.Conv2d(32, 64, kernel_size=3, padding=1)

self.conv3 = nn.Conv2d(64, 128, kernel_size=3, padding=1)

self.pool = nn.MaxPool2d(2, 2)

# 修正全连接层输入尺寸

# 输入图像224x224,经过3次池化后变为28x28 (224/2^3=28)

self.fc1 = nn.Linear(128 * 28 * 28, 512)

self.fc2 = nn.Linear(512, num_classes) # 输出类别数改为2

self.dropout = nn.Dropout(0.5) # 添加dropout防止过拟合

def forward(self, x):

x = self.pool(F.relu(self.conv1(x)))

x = self.pool(F.relu(self.conv2(x)))

x = self.pool(F.relu(self.conv3(x)))

# 展平特征图

x = x.view(-1, 128 * 28 * 28)

x = self.dropout(F.relu(self.fc1(x)))

x = self.fc2(x)

return x

# 初始化模型

model = SimpleCNN(num_classes=len(train_dataset.classes))

model = model.to(device)

print("模型已创建")

# 评估模型函数 - 先定义这个函数

def evaluate_model(model):

model.eval() # 设置模型为评估模式

correct = 0

total = 0

with torch.no_grad():

for data in testloader:

images, labels = data

images, labels = images.to(device), labels.to(device)

outputs = model(images)

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

accuracy = 100 * correct / total

model.train() # 恢复训练模式

return accuracy

# 训练模型

def train_model(model, epochs=20): # 增加训练轮数

criterion = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

# 学习率调度器

scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=3, gamma=0.1)

model.train() # 设置模型为训练模式

for epoch in range(epochs):

running_loss = 0.0

correct = 0

total = 0

for i, data in enumerate(trainloader, 0):

inputs, labels = data

inputs, labels = inputs.to(device), labels.to(device)

optimizer.zero_grad()

outputs = model(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

running_loss += loss.item()

# 计算准确率

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

if i % 100 == 99:

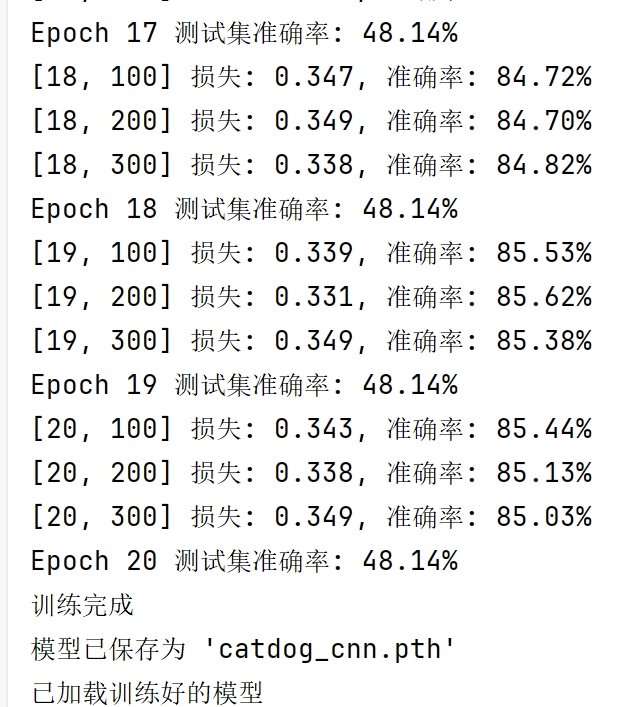

print(f'[{epoch + 1}, {i + 1}] 损失: {running_loss / 100:.3f}, 准确率: {100 * correct / total:.2f}%')

running_loss = 0.0

# 更新学习率

scheduler.step()

# 每个epoch结束后在测试集上评估

test_accuracy = evaluate_model(model)

print(f'Epoch {epoch + 1} 测试集准确率: {test_accuracy:.2f}%')

print("训练完成")

# 保存模型

torch.save(model.state_dict(), 'catdog_cnn.pth')

print("模型已保存为 'catdog_cnn.pth'")

# 训练模型

print("开始训练模型...")

train_model(model, epochs=20)

# 加载最佳模型

model.load_state_dict(torch.load('catdog_cnn.pth'))

model.eval()

print("已加载训练好的模型")

# Grad-CAM实现

class GradCAM:

def __init__(self, model, target_layer):

self.model = model

self.target_layer = target_layer

self.gradients = None

self.activations = None

self.register_hooks()

def register_hooks(self):

def forward_hook(module, input, output):

self.activations = output.detach()

def backward_hook(module, grad_input, grad_output):

self.gradients = grad_output[0].detach()

self.target_layer.register_forward_hook(forward_hook)

self.target_layer.register_backward_hook(backward_hook)

def generate_cam(self, input_image, target_class=None):

model_output = self.model(input_image)

if target_class is None:

target_class = torch.argmax(model_output, dim=1).item()

self.model.zero_grad()

one_hot = torch.zeros_like(model_output)

one_hot[0, target_class] = 1

model_output.backward(gradient=one_hot)

gradients = self.gradients

activations = self.activations

weights = torch.mean(gradients, dim=(2, 3), keepdim=True)

cam = torch.sum(weights * activations, dim=1, keepdim=True)

cam = F.relu(cam)

# 调整到输入图像大小 (224x224)

cam = F.interpolate(cam, size=(224, 224), mode='bilinear', align_corners=False)

cam = cam - cam.min()

cam = cam / cam.max() if cam.max() > 0 else cam

return cam.cpu().squeeze().numpy(), target_class

# 选择目标层进行Grad-CAM可视化

target_layer = model.conv3 # 使用最后一个卷积层

# 创建Grad-CAM实例

grad_cam = GradCAM(model, target_layer)

# 获取一个测试样本进行可视化

dataiter = iter(testloader)

images, labels = next(dataiter)

# 选择第一个图像进行可视化

image = images[0:1].to(device) # 保持batch维度

true_label = labels[0].item()

# 生成热图

heatmap, pred_class = grad_cam.generate_cam(image)

# 可视化结果

def visualize_gradcam(image, heatmap, true_label, pred_label, class_names):

# 将图像转换为numpy数组

image = image.cpu().squeeze().numpy()

image = np.transpose(image, (1, 2, 0))

# 反归一化

mean = np.array([0.485, 0.456, 0.406])

std = np.array([0.229, 0.224, 0.225])

image = std * image + mean

image = np.clip(image, 0, 1)

# 创建子图

fig, (ax1, ax2, ax3) = plt.subplots(1, 3, figsize=(15, 5))

# 显示原始图像

ax1.imshow(image)

ax1.set_title(f'原始图像\n真实类别: {class_names[true_label]}')

ax1.axis('off')

# 显示热图

ax2.imshow(heatmap, cmap='jet')

ax2.set_title('Grad-CAM热图')

ax2.axis('off')

# 显示叠加效果

heatmap_resized = np.uint8(255 * heatmap)

heatmap_colored = plt.cm.jet(heatmap_resized)[..., :3] # 只取RGB,忽略alpha

superimposed = 0.5 * image + 0.5 * heatmap_colored

ax3.imshow(superimposed)

ax3.set_title(f'叠加效果\n预测类别: {class_names[pred_class]}')

ax3.axis('off')

plt.tight_layout()

plt.show()

# 获取类别名称

class_names = train_dataset.classes

# 可视化Grad-CAM结果

visualize_gradcam(images[0], heatmap, true_label, pred_class, class_names)

我在自己的电脑上跑的,速度很慢,所以我只跑了20个epoch,接下来我会增加epoch继续对模型进行训练,看效果会不会好一点