Week P6: Face Recognition with VGG-16 in PyTorch

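🍺 Requirements:

- Save the best model weights found during training

- Use the official VGG-16 network from torchvision

🍻 Stretch goals (optional):

- Reach 60% accuracy on the test set (fairly hard, but you learn a lot in the process)

- Build the VGG-16 network by hand

🏡 My environment:

- Language: Python 3.8

- Editor: Jupyter Lab

- Deep learning environment:

- torch==2.2.2

- torchvision==0.17.2

- Data: celebrity face images, 17 classes (./data/p6)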

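I. Preparation

1.1. Set up the GPU

- Use the GPU if the device has one; otherwise fall back to the CPU

- On a Mac, the GPU is used through the mps (Metal Performance Shaders) backend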

import torch
import torch.nn as nn
import matplotlib.pyplot as plt
import torchvision
from torchvision import transforms, datasets
import os, PIL, pathlib, warnings

warnings.filterwarnings("ignore")  # ignore warning messages

# checks that the current macOS version is at least 12.3 and an MPS device is available
print(torch.backends.mps.is_available())
# checks that the current PyTorch installation was built with MPS enabled
print(torch.backends.mps.is_built())

# choose the hardware device: a CUDA GPU, then an Apple-silicon GPU (mps), otherwise the CPU
device = torch.device("cuda" if torch.cuda.is_available()
                      else "mps" if torch.backends.mps.is_available()
                      else "cpu")
device  # a GPU (mps) is being used here

True

True

device(type='mps')

1.2. Load the data

import os,PIL,random,pathlib

data_dir = './data/p6/'

data_dir = pathlib.Path(data_dir)

data_paths = list(data_dir.glob('*'))

classeNames = [str(path).split("/")[-1] for path in data_paths]

classeNames

['Robert Downey Jr',

'Brad Pitt',

'Leonardo DiCaprio',

'Jennifer Lawrence',

'Tom Cruise',

'Hugh Jackman',

'Angelina Jolie',

'Johnny Depp',

'Tom Hanks',

'Denzel Washington',

'Kate Winslet',

'Scarlett Johansson',

'Will Smith',

'Natalie Portman',

'Nicole Kidman',

'Sandra Bullock',

'Megan Fox']

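- Step 1: use pathlib.Path() to convert the folder path string into a pathlib.Path object.

- Step 2: use the glob() method to collect every path under data_dir and store them as a list in data_paths.

- Step 3: split each path in data_paths with split() and keep the last component, the folder name, which is the class name; store these in classeNames.

- Step 4: print the classeNames list to show the class names.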
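The same four steps can be written in a platform-independent way. The sketch below is an illustration under two assumptions: each class lives in its own sub-folder, and anything that is not a directory (for example a stray .DS_Store file) should be skipped. It uses Path.name instead of str(path).split("/"), which would break on Windows backslash paths, and sorts the result for a stable order. The folder names in the demo are made up.

```python
import pathlib
import tempfile

def class_names_from_dirs(root):
    """Return the sub-folder names under root (one folder per class), sorted."""
    root = pathlib.Path(root)
    # Path.name is the last path component on every OS, unlike str(path).split("/")
    return sorted(p.name for p in root.glob('*') if p.is_dir())

with tempfile.TemporaryDirectory() as tmp:
    # made-up miniature layout: one folder per person plus a stray file
    for name in ["Tom Hanks", "Brad Pitt", "Megan Fox"]:
        (pathlib.Path(tmp) / name).mkdir()
    (pathlib.Path(tmp) / ".DS_Store").write_text("")
    print(class_names_from_dirs(tmp))  # ['Brad Pitt', 'Megan Fox', 'Tom Hanks']
```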

# For more on transforms.Compose, see: https://blog.csdn.net/qq_38251616/article/details/124878863
train_transforms = transforms.Compose([
    transforms.Resize([224, 224]),        # resize every input image to the same size
    # transforms.RandomHorizontalFlip(),  # random horizontal flip (disabled)
    transforms.ToTensor(),                # convert a PIL Image or numpy.ndarray to a tensor scaled to [0, 1]
    transforms.Normalize(                 # standardize each channel: (x - mean) / std, which helps the model converge
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225])        # the standard ImageNet channel statistics, matching the pretrained VGG-16 weights
])
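You can work out by hand what ToTensor() followed by Normalize() does to a single pixel. The pure-Python sketch below needs no torchvision and only illustrates the arithmetic; it is not torchvision's implementation. It scales an 8-bit value to [0, 1] and then applies (x - mean) / std per channel using the ImageNet statistics above. A white pixel maps to roughly 2.2 to 2.6 per channel and a black one to roughly -1.8 to -2.1.

```python
# ImageNet channel statistics used by transforms.Normalize above
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)

def to_tensor_and_normalize(rgb):
    """Mimic ToTensor() + Normalize() for one 8-bit RGB pixel."""
    scaled = [v / 255.0 for v in rgb]                            # ToTensor: [0, 255] -> [0, 1]
    return [(x - m) / s for x, m, s in zip(scaled, MEAN, STD)]   # (x - mean) / std per channel

print([round(v, 4) for v in to_tensor_and_normalize((255, 255, 255))])  # [2.2489, 2.4286, 2.64]
```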

total_data = datasets.ImageFolder("./data/p6",transform=train_transforms)

total_data

Dataset ImageFolder

Number of datapoints: 1800

Root location: ./data/p6

StandardTransform

Transform: Compose(

Resize(size=[224, 224], interpolation=bilinear, max_size=None, antialias=True)

ToTensor()

Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

)

total_data.class_to_idx

{'Angelina Jolie': 0,

'Brad Pitt': 1,

'Denzel Washington': 2,

'Hugh Jackman': 3,

'Jennifer Lawrence': 4,

'Johnny Depp': 5,

'Kate Winslet': 6,

'Leonardo DiCaprio': 7,

'Megan Fox': 8,

'Natalie Portman': 9,

'Nicole Kidman': 10,

'Robert Downey Jr': 11,

'Sandra Bullock': 12,

'Scarlett Johansson': 13,

'Tom Cruise': 14,

'Tom Hanks': 15,

'Will Smith': 16}

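total_data.class_to_idx is a dictionary that maps each class in the dataset to its index. PyTorch's ImageFolder treats every sub-folder of the root directory as one class, and class_to_idx maps each class (folder) name to an integer index. The folder names are sorted alphabetically before they are numbered, which is why the order here differs from classeNames above.

For example, if the dataset folder contained two sub-folders, say Monkeypox and Others, class_to_idx would return a mapping like {'Monkeypox': 0, 'Others': 1}.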
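ImageFolder sorts the class folder names before it numbers them; in torchvision this happens in its find_classes helper. This is why the indices above are alphabetical even though classeNames came back from glob() in a different order. The pure-Python sketch below reproduces that mapping and is only an illustration, not torchvision's code. It uses a few of the folder names from this dataset.

```python
def class_to_idx(folder_names):
    """Sketch of how ImageFolder numbers classes: sort the names, then enumerate."""
    classes = sorted(folder_names)
    return {name: i for i, name in enumerate(classes)}

# folder names in the arbitrary order glob() happened to return them
found = ['Robert Downey Jr', 'Brad Pitt', 'Angelina Jolie', 'Will Smith']
print(class_to_idx(found))
# {'Angelina Jolie': 0, 'Brad Pitt': 1, 'Robert Downey Jr': 2, 'Will Smith': 3}
```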

1.3. Split the dataset

train_size = int(0.8 * len(total_data))

test_size = len(total_data) - train_size

train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])

train_dataset, test_dataset

(<torch.utils.data.dataset.Subset at 0x14752ff10>,

<torch.utils.data.dataset.Subset at 0x147695730>)
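The split sizes follow from int(0.8 * 1800) = 1440 training images and 1800 - 1440 = 360 test images. random_split draws a new random permutation on every run unless you pass it a seeded generator (generator=torch.Generator().manual_seed(...)), so the train/test split, and therefore the test accuracy, can change between runs. The sketch below mimics the idea with the standard library only. The function name split_indices and the seed 42 are illustrative assumptions, not part of torch.

```python
import random

def split_indices(n, train_frac=0.8, seed=42):
    """Sketch of an 80/20 random split in the spirit of torch.utils.data.random_split."""
    train_size = int(train_frac * n)    # same rounding as the code above
    test_size = n - train_size          # the remainder goes to the test set
    idx = list(range(n))
    random.Random(seed).shuffle(idx)    # fixed seed -> the same split on every run
    return idx[:train_size], idx[train_size:train_size + test_size]

train_idx, test_idx = split_indices(1800)
print(len(train_idx), len(test_idx))  # 1440 360
```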

batch_size = 32

train_dl = torch.utils.data.DataLoader(train_dataset,

batch_size=batch_size,

shuffle=True,

num_workers=1)

test_dl = torch.utils.data.DataLoader(test_dataset,

batch_size=batch_size,

shuffle=True,

num_workers=1)

for X, y in test_dl:
    print("Shape of X [N, C, H, W]: ", X.shape)
    print("Shape of y: ", y.shape, y.dtype)
    break

Shape of X [N, C, H, W]: torch.Size([32, 3, 224, 224])

Shape of y: torch.Size([32]) torch.int64

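⭐ torch.utils.data.DataLoader() parameters explained

torch.utils.data.DataLoader is PyTorch's utility class for loading and managing data. It lets you iterate over a dataset in mini-batches, which is what training neural networks and other machine-learning models requires. The constructor accepts many parameters. The commonly used ones are:

- dataset (required): the dataset object that holds the data samples, usually an instance of a torch.utils.data.Dataset subclass.

- batch_size (optional): the number of samples in each mini-batch. Default: 1.

- shuffle (optional): if True, the data is reshuffled at the start of every epoch so that the model cannot learn anything from the order of the samples. Default: False.

- num_workers (optional): the number of subprocesses used to load data. A value greater than 0 usually speeds up loading, especially for large datasets. Default: 0, meaning data is loaded in the main process.

- pin_memory (optional): if True, the loader copies tensors into page-locked (pinned) host memory before returning them, which speeds up their transfer to a CUDA GPU. Default: False.

- drop_last (optional): if True, the last mini-batch is dropped when it contains fewer than batch_size samples, so that all mini-batches have the same size. Default: False.

- timeout (optional): if positive, the time limit in seconds for collecting a batch from the worker processes, which guards against workers that hang. Default: 0, meaning no timeout.

- worker_init_fn (optional): a function called in each worker subprocess to initialize it, for example to set a per-worker random seed.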
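The number of batches per epoch follows directly from batch_size and drop_last. This number is len(train_dl) and len(test_dl), which the train and test functions below use as num_batches. The small sketch below uses the split sizes from this post (1440 training and 360 test images, batch_size=32). It assumes an ordinary map-style dataset like the ImageFolder subsets here.

```python
import math

def batches_per_epoch(n_samples, batch_size, drop_last=False):
    """Number of batches a DataLoader yields for a map-style dataset."""
    if drop_last:
        return n_samples // batch_size          # the incomplete last batch is dropped
    return math.ceil(n_samples / batch_size)    # the last batch may be smaller

print(batches_per_epoch(1440, 32), batches_per_epoch(360, 32))    # 45 12
print(batches_per_epoch(360, 32, drop_last=True), 360 - 11 * 32)  # 11 8
```

With drop_last=False the 12th test batch holds only 8 images. Dividing the accumulated loss by the number of batches therefore weights that small batch as heavily as a full one.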

II. Using the official VGG-16 model

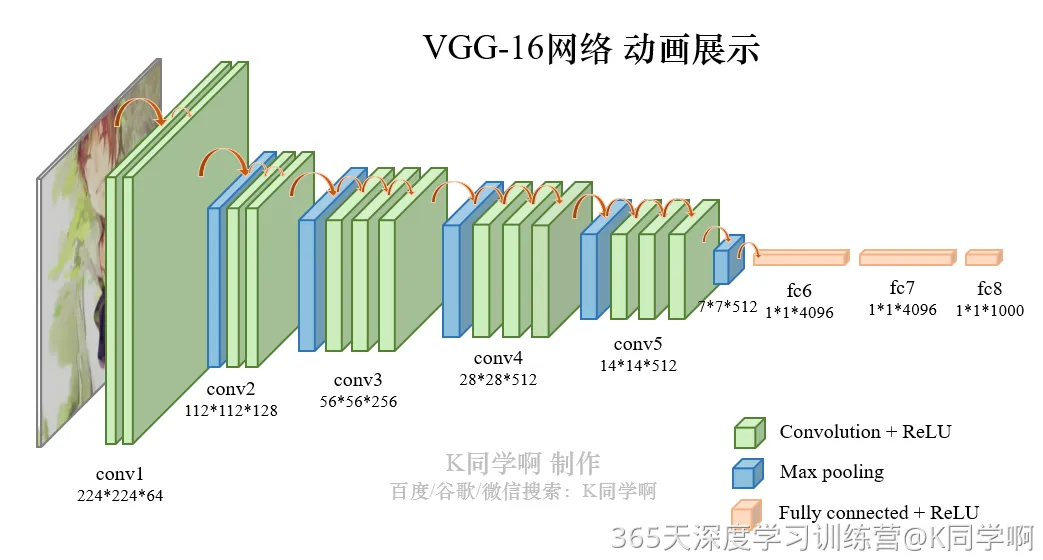

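VGG-16 (Visual Geometry Group-16) is a deep convolutional neural network architecture for image classification and object recognition. The Visual Geometry Group at the University of Oxford proposed it in 2014 as one member of the VGG family. VGG-16 drew wide attention because of its strong results in the ImageNet (ILSVRC 2014) competition, which showed that it works well on large-scale image recognition.

The main characteristics of VGG-16 are:

- Depth: VGG-16 consists of 13 convolutional layers and 3 fully connected layers, a relatively deep structure. The depth helps the network learn more abstract and complex features.

- Convolutional layer design: every convolutional layer uses 3x3 kernels with stride 1 (and padding 1) and is followed by a ReLU activation. Stacking several small kernels increases the network's non-linear modelling capacity. It also uses fewer parameters than a single larger kernel with the same receptive field, which lowers the risk of overfitting.

- Pooling layers: after each group of convolutional layers, VGG-16 uses max pooling to shrink the spatial size of the feature maps. This keeps the most salient features and reduces computation.

- Fully connected layers: the convolutional layers are followed by 3 fully connected layers. The last one outputs a vector with one entry per class, which is used for classification.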
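The parameter savings claimed in the list above are easy to check. Two stacked 3x3 convolutions see a 5x5 window and three see a 7x7 window, yet they use fewer weights than a single 5x5 or 7x7 kernel with the same channel count C (2·9C² vs 25C², and 3·9C² vs 49C²). They also add extra ReLUs between the layers. In the sketch below, C = 256 is an arbitrary choice and biases are ignored.

```python
def receptive_field(num_layers, kernel=3):
    """Receptive field of a stack of stride-1 convolutions."""
    return 1 + num_layers * (kernel - 1)

def conv_weights(kernel, channels, num_layers=1):
    """Weight count (biases ignored) of num_layers convs with C input and C output channels."""
    return num_layers * kernel * kernel * channels * channels

C = 256
print(receptive_field(2), conv_weights(3, C, 2), conv_weights(5, C))  # 5 1179648 1638400
print(receptive_field(3), conv_weights(3, C, 3), conv_weights(7, C))  # 7 1769472 3211264
```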

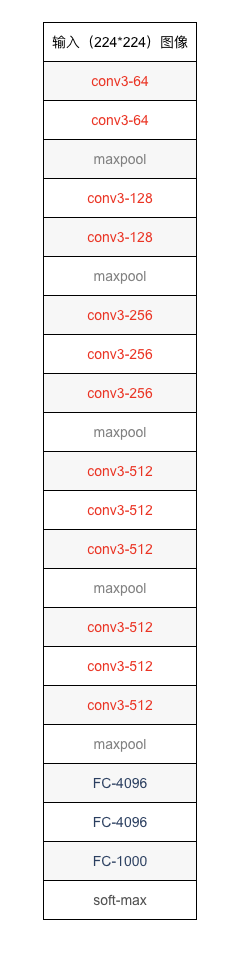

VGG-16 structure:

- 13 convolutional layers, labelled blockX_convX;

- 3 fully connected layers, labelled classifier;

- 5 pooling layers.

VGG-16 has 16 weight layers (13 convolutional and 3 fully connected), hence the name VGG-16.
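The in_features=25088 of the first fully connected layer follows from this layer pattern. Each 3x3 convolution with padding 1 keeps the height and width, and each 2x2 max pool with stride 2 halves them, so a 224x224 input shrinks to 7x7 with 512 channels after five blocks. The sketch below traces those shapes with the standard output-size formula (H + 2p - k) / s + 1. It is plain arithmetic and does not build the network.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output size of a convolution or pooling window: (H + 2p - k) // s + 1."""
    return (size + 2 * padding - kernel) // stride + 1

def vgg16_feature_shape(size=224):
    """Trace (channels, H, W) through VGG-16's five conv blocks."""
    blocks = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]  # (number of convs, out channels)
    channels = 3                                                # RGB input
    for n_convs, channels in blocks:
        for _ in range(n_convs):
            size = conv_out(size, kernel=3, stride=1, padding=1)  # 3x3 conv keeps H and W
        size = conv_out(size, kernel=2, stride=2)                 # 2x2 max pool halves them
    return channels, size, size

c, h, w = vgg16_feature_shape(224)
print(c, h, w, c * h * w)  # 512 7 7 25088
```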

VGG-16 model structure

from torchvision.models import vgg16, VGG16_Weights

# load the pretrained model, then fine-tune it
print("""Downloading: "https://download.pytorch.org/models/vgg16-397923af.pth" to /Users/henry/.cache/torch/hub/checkpoints/vgg16-397923af.pth""")
model = vgg16(weights=VGG16_Weights.IMAGENET1K_V1).to(device)  # VGG-16 with ImageNet weights (replaces the deprecated pretrained=True)
for param in model.parameters():
    param.requires_grad = False  # freeze all parameters so that only the new last layer is trained

# replace layer 6 of the classifier module
# (originally (6): Linear(in_features=4096, out_features=1000, bias=True); compare with the printed model below)
model.classifier[6] = nn.Linear(4096, len(classeNames))  # the new last layer outputs one score per target class
model.to(device)
model

Downloading: "https://download.pytorch.org/models/vgg16-397923af.pth" to /Users/henry/.cache/torch/hub/checkpoints/vgg16-397923af.pth

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=17, bias=True)

)

)

Building the model by hand

import torch
import torch.nn as nn

# define the VGG16 model
class VGG16(nn.Module):
    def __init__(self, num_classes=len(classeNames)):
        super(VGG16, self).__init__()
        self.features = nn.Sequential(
            # block 1
            nn.Conv2d(3, 64, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(64, 64, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
            # block 2
            nn.Conv2d(64, 128, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(128, 128, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
            # block 3
            nn.Conv2d(128, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
            # block 4
            nn.Conv2d(256, 512, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
            # block 5
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(512, 512, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2)
        )
        self.classifier = nn.Sequential(
            nn.Linear(512 * 7 * 7, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5, inplace=False),
            nn.Linear(4096, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5, inplace=False),
            nn.Linear(4096, num_classes)
        )

    def forward(self, x):
        x = self.features(x)
        x = torch.flatten(x, 1)  # (N, 512, 7, 7) -> (N, 25088) for 224x224 inputs
        x = self.classifier(x)
        return x

# create a VGG16 model instance
model_vgg16 = VGG16()
# print the model structure
print(model_vgg16)

VGG16(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=17, bias=True)

)

)

from torchinfo import summary

summary(model)

=================================================================

Layer (type:depth-idx) Param #

=================================================================

VGG --

├─Sequential: 1-1 --

│ └─Conv2d: 2-1 (1,792)

│ └─ReLU: 2-2 --

│ └─Conv2d: 2-3 (36,928)

│ └─ReLU: 2-4 --

│ └─MaxPool2d: 2-5 --

│ └─Conv2d: 2-6 (73,856)

│ └─ReLU: 2-7 --

│ └─Conv2d: 2-8 (147,584)

│ └─ReLU: 2-9 --

│ └─MaxPool2d: 2-10 --

│ └─Conv2d: 2-11 (295,168)

│ └─ReLU: 2-12 --

│ └─Conv2d: 2-13 (590,080)

│ └─ReLU: 2-14 --

│ └─Conv2d: 2-15 (590,080)

│ └─ReLU: 2-16 --

│ └─MaxPool2d: 2-17 --

│ └─Conv2d: 2-18 (1,180,160)

│ └─ReLU: 2-19 --

│ └─Conv2d: 2-20 (2,359,808)

│ └─ReLU: 2-21 --

│ └─Conv2d: 2-22 (2,359,808)

│ └─ReLU: 2-23 --

│ └─MaxPool2d: 2-24 --

│ └─Conv2d: 2-25 (2,359,808)

│ └─ReLU: 2-26 --

│ └─Conv2d: 2-27 (2,359,808)

│ └─ReLU: 2-28 --

│ └─Conv2d: 2-29 (2,359,808)

│ └─ReLU: 2-30 --

│ └─MaxPool2d: 2-31 --

├─AdaptiveAvgPool2d: 1-2 --

├─Sequential: 1-3 --

│ └─Linear: 2-32 (102,764,544)

│ └─ReLU: 2-33 --

│ └─Dropout: 2-34 --

│ └─Linear: 2-35 (16,781,312)

│ └─ReLU: 2-36 --

│ └─Dropout: 2-37 --

│ └─Linear: 2-38 69,649

=================================================================

Total params: 134,330,193

Trainable params: 69,649

Non-trainable params: 134,260,544

=================================================================
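You can reproduce the counts in this summary by hand. A 3x3 convolution has 9·C_in·C_out weights plus C_out biases, and a Linear layer has n_in·n_out weights plus n_out biases. Only the replaced last layer (4096 → 17) is trainable. The frozen layers are the ones shown in parentheses above. The sketch below is plain arithmetic based on the printed layer sizes.

```python
def conv_params(c_in, c_out, k=3):
    return k * k * c_in * c_out + c_out   # weights + one bias per output channel

def linear_params(n_in, n_out):
    return n_in * n_out + n_out           # weights + bias

cfg = [(3, 64), (64, 64), (64, 128), (128, 128),
       (128, 256), (256, 256), (256, 256),
       (256, 512), (512, 512), (512, 512),
       (512, 512), (512, 512), (512, 512)]          # the 13 conv layers
conv_total = sum(conv_params(i, o) for i, o in cfg)
frozen_fc = linear_params(25088, 4096) + linear_params(4096, 4096)
new_head = linear_params(4096, 17)                   # the replaced classifier[6]

print(new_head)                           # 69649
print(conv_total + frozen_fc)             # 134260544
print(conv_total + frozen_fc + new_head)  # 134330193
```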

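III. Training the model

3.1. Writing the training function

optimizer.zero_grad()

This iterates over all of the model's parameters and clears the gradients left over from the previous iteration. By default in PyTorch 2.x it sets each .grad to None rather than filling it with zeros. Without this step, the new gradients would be added to the old ones.

loss.backward()

PyTorch implements backpropagation (tensor.backward()) in the autograd package, which computes gradients automatically from the operations applied to tensors. torch.Tensor is the core class of autograd. If a tensor's requires_grad is True, autograd records every operation performed on it. When you call backward() on a result, autograd computes all the gradients and accumulates each tensor's gradient into its .grad attribute.

More concretely, the loss is computed from the model's weights w through a chain of operations. If a weight w has requires_grad set to True, every tensor computed from it records the operation that produced it in its .grad_fn attribute. loss.backward() then walks back through these records layer by layer, computes the gradient for each w, and stores it in that w's .grad attribute. Until backward() has been called, the gradients are None, which is why loss.backward() must come before optimizer.step().

optimizer.step()

step() performs one optimization step, updating the parameters from their gradients (gradient descent in the case of SGD). Because the update is based on the gradients, loss.backward() must run before optimizer.step(). Note that the optimizer only applies the update and does not produce the gradients; those come from tensor.backward().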
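The zero_grad → backward → step cycle can be illustrated without PyTorch, along with the reason gradients must be cleared on every iteration. The Param class and the helper functions below are hypothetical toy stand-ins, not the torch API. There is a single weight w and the loss is (w·x - y)². backward() accumulates into .grad in the same way autograd accumulates into a tensor's .grad. With zeroing, w moves steadily toward the target 2. Without zeroing, stale gradients pile up and w overshoots.

```python
class Param:
    """Toy stand-in for a tensor with requires_grad=True (hypothetical, not torch)."""
    def __init__(self, value):
        self.value = value
        self.grad = None

def backward(w, x, y):
    """Add d/dw of the loss (w*x - y)**2 to w.grad, accumulating like autograd."""
    g = 2 * (w.value * x - y) * x
    w.grad = g if w.grad is None else w.grad + g

def sgd_step(w, lr):
    w.value -= lr * w.grad          # the optimizer only consumes .grad

w = Param(0.0)
for _ in range(3):
    w.grad = None                   # like zero_grad(): drop the previous gradient
    backward(w, x=1.0, y=2.0)       # like loss.backward(): compute a fresh gradient
    sgd_step(w, lr=0.25)            # like optimizer.step(): update with that gradient
print(w.value)   # 1.75

w2 = Param(0.0)
for _ in range(3):
    backward(w2, x=1.0, y=2.0)      # no zeroing: gradients accumulate across steps
    sgd_step(w2, lr=0.25)
print(w2.value)  # 3.75, overshooting the target w = 2
```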

# training loop
def train(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)  # size of the training set
    num_batches = len(dataloader)   # number of batches (size / batch_size, rounded up)
    train_loss, train_acc = 0, 0    # initialize the training loss and accuracy

    for X, y in dataloader:  # fetch a batch of images and their labels
        X, y = X.to(device), y.to(device)

        # compute the prediction error
        pred = model(X)          # network output
        loss = loss_fn(pred, y)  # loss between the network output and the true labels y

        # backpropagation
        optimizer.zero_grad()  # reset the gradients
        loss.backward()        # backpropagate
        optimizer.step()       # update the parameters

        # accumulate accuracy and loss
        train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()
        train_loss += loss.item()

    train_acc /= size
    train_loss /= num_batches
    return train_acc, train_loss

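pred.argmax(1) returns, for each row of pred, the index of the largest value along dimension 1, the class dimension. In multi-class classification pred is a 2-D tensor with one row per sample and one column per class. Here the rows hold raw scores (logits) rather than probabilities, but the index of the maximum is the same either way.

(pred.argmax(1) == y) is a boolean tensor whose entries record whether each sample was predicted correctly (True) or not (False). .type(torch.float) converts it to floats, turning True into 1.0 and False into 0.0. .sum() adds up the elements to give the number of correctly predicted samples. .item() then turns that one-element tensor into a Python scalar that can be used or printed.

So (pred.argmax(1) == y).type(torch.float).sum().item() counts the correctly predicted samples in a batch and returns the count as a Python number. Dividing the total over all batches by the dataset size gives the accuracy.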
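The sketch below spells out the same counting in pure Python for one made-up batch of three samples and three classes. It mirrors the tensor expression step by step: argmax over the class dimension, compare with the label, convert True/False to 1.0/0.0, and sum. It illustrates the logic and is not how PyTorch computes it.

```python
def count_correct(logits, labels):
    """Pure-Python equivalent of (pred.argmax(1) == y).type(torch.float).sum().item()."""
    correct = 0.0
    for row, label in zip(logits, labels):
        predicted = max(range(len(row)), key=row.__getitem__)  # argmax over the class dimension
        correct += float(predicted == label)                    # True -> 1.0, False -> 0.0
    return correct

batch_logits = [[2.0, 0.1, -1.0],   # argmax 0
                [0.3, 0.2, 1.5],    # argmax 2
                [0.0, 4.0, 1.0]]    # argmax 1
labels = [0, 1, 1]
print(count_correct(batch_logits, labels))  # 2.0
```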

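3.2. Writing the test function

The test function is largely the same as the training function. It does not run gradient descent to update the network weights, so it does not need an optimizer.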

def test(dataloader, model, loss_fn):
    size = len(dataloader.dataset)  # size of the test set
    num_batches = len(dataloader)   # number of batches (size / batch_size, rounded up)
    test_loss, test_acc = 0, 0

    # no training here, so disable gradient tracking to save memory and computation
    with torch.no_grad():
        for imgs, target in dataloader:
            imgs, target = imgs.to(device), target.to(device)

            # compute the loss
            target_pred = model(imgs)
            loss = loss_fn(target_pred, target)

            test_loss += loss.item()
            test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()

    test_acc /= size
    test_loss /= num_batches
    return test_acc, test_loss

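3.3. Setting a dynamic learning rate

# def adjust_learning_rate(optimizer, epoch, start_lr):
#     # decay the learning rate by a factor of 0.92 every 2 epochs
#     lr = start_lr * (0.92 ** (epoch // 2))
#     for param_group in optimizer.param_groups:
#         param_group['lr'] = lr

learn_rate = 1e-4  # initial learning rate
# optimizer = torch.optim.SGD(model.parameters(), lr=learn_rate)

✨ Using the official dynamic learning-rate API

This applies the same kind of step decay as the function above, but through PyTorch's built-in scheduler. Note that lambda1 below decays every 4 epochs, whereas the function above decays every 2.

# used when calling the official dynamic learning-rate API
lambda1 = lambda epoch: 0.92 ** (epoch // 4)
optimizer = torch.optim.SGD(model.parameters(), lr=learn_rate)
scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda1)  # choose the adjustment method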
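LambdaLR sets each parameter group's learning rate to the initial lr multiplied by lr_lambda(epoch), where epoch is the scheduler's internal step counter. The pure-Python sketch below reproduces the resulting step-decay schedule for lambda1: it starts at 1e-4 and multiplies by 0.92 every 4 epochs. In the training loop in 3.4, scheduler.step() runs before the learning rate is printed, so the value logged for epoch k (counting from 1) corresponds to counter value k. That assumption is what makes the numbers line up with the Lr column of the log.

```python
def lambda_lr(base_lr, last_epoch, gamma=0.92, every=4):
    """LR set by LambdaLR: base_lr * lr_lambda(last_epoch), with lr_lambda = gamma ** (epoch // every)."""
    return base_lr * gamma ** (last_epoch // every)

# scheduler.step() has been called k times when epoch k's learning rate is printed
for k in (1, 4, 8, 40):
    print(k, f"{lambda_lr(1e-4, k):.2E}")
# 1 1.00E-04
# 4 9.20E-05
# 8 8.46E-05
# 40 4.34E-05
```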

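👉 Example of calling the official API:

This code block only illustrates the API and is not part of the overall program. Here dataset and loss_fn are placeholders, and model is a plain list of parameters rather than a callable network.

from torch.optim import SGD
from torch.optim.lr_scheduler import ExponentialLR

model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]
optimizer = SGD(model, 0.1)
scheduler = ExponentialLR(optimizer, gamma=0.9)

for epoch in range(20):
    for input, target in dataset:
        optimizer.zero_grad()
        output = model(input)
        loss = loss_fn(output, target)
        loss.backward()
        optimizer.step()
    scheduler.step()  # once per epoch, after the optimizer updates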

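3.4. Training

model.train() and model.eval() were covered in detail in earlier posts of this training camp.

Note how the best model is saved here, and how this compares with saving a model in TensorFlow 2.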
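The loop uses copy.deepcopy(model) rather than best_model = model because a plain assignment only creates a second name for the same object. That object keeps changing as training continues, so it would not preserve the best epoch. The sketch below uses a hypothetical dict of integers in place of model weights and made-up per-epoch accuracies. The same reasoning is why you would save best_model.state_dict(), not model.state_dict(), once training ends.

```python
import copy

weights = {"fc.weight": [0, 0]}   # hypothetical stand-in for model parameters
history = [0.40, 0.55, 0.50]      # made-up per-epoch test accuracies

best_acc, best_alias, best_copy = 0.0, None, None
for epoch, acc in enumerate(history):
    weights["fc.weight"][0] += 1         # "training" mutates the parameters in place
    if acc > best_acc:
        best_acc = acc
        best_alias = weights             # plain assignment: same object, keeps changing
        best_copy = copy.deepcopy(weights)  # independent snapshot taken at the best epoch

print(best_acc, best_copy["fc.weight"][0], best_alias["fc.weight"][0])  # 0.55 2 3
```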

import copy

loss_fn = nn.CrossEntropyLoss()  # create the loss function
epochs = 40

train_loss = []
train_acc = []
test_loss = []
test_acc = []

best_acc = 0  # best test accuracy so far, used to decide which model is the best

for epoch in range(epochs):
    # update the learning rate (only when using the custom function)
    # adjust_learning_rate(optimizer, epoch, learn_rate)

    model.train()
    epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, optimizer)
    scheduler.step()  # update the learning rate (when using the official scheduler API)

    model.eval()
    epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)

    # keep a copy of the best model so far in best_model
    if epoch_test_acc > best_acc:
        best_acc = epoch_test_acc
        best_model = copy.deepcopy(model)

    train_acc.append(epoch_train_acc)
    train_loss.append(epoch_train_loss)
    test_acc.append(epoch_test_acc)
    test_loss.append(epoch_test_loss)

    # get the current learning rate
    lr = optimizer.state_dict()['param_groups'][0]['lr']

    template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}, Lr:{:.2E}')
    print(template.format(epoch+1, epoch_train_acc*100, epoch_train_loss,
                          epoch_test_acc*100, epoch_test_loss, lr))

# save the best model's weights to a file
PATH = './best_model.pth'  # file name for the saved parameters
torch.save(best_model.state_dict(), PATH)  # save best_model, not the model from the final epoch
print('Done')

Epoch: 1, Train_acc:5.1%, Train_loss:2.935, Test_acc:4.7%, Test_loss:2.887, Lr:1.00E-04

Epoch: 2, Train_acc:7.3%, Train_loss:2.912, Test_acc:4.7%, Test_loss:2.847, Lr:1.00E-04

Epoch: 3, Train_acc:7.6%, Train_loss:2.863, Test_acc:6.7%, Test_loss:2.831, Lr:1.00E-04

Epoch: 4, Train_acc:9.2%, Train_loss:2.828, Test_acc:10.0%, Test_loss:2.805, Lr:9.20E-05

Epoch: 5, Train_acc:9.9%, Train_loss:2.809, Test_acc:11.9%, Test_loss:2.770, Lr:9.20E-05

Epoch: 6, Train_acc:12.3%, Train_loss:2.778, Test_acc:12.5%, Test_loss:2.756, Lr:9.20E-05

Epoch: 7, Train_acc:12.7%, Train_loss:2.766, Test_acc:13.1%, Test_loss:2.743, Lr:9.20E-05

Epoch: 8, Train_acc:13.1%, Train_loss:2.733, Test_acc:13.6%, Test_loss:2.714, Lr:8.46E-05

Epoch: 9, Train_acc:14.7%, Train_loss:2.719, Test_acc:14.4%, Test_loss:2.714, Lr:8.46E-05

Epoch:10, Train_acc:14.2%, Train_loss:2.707, Test_acc:14.2%, Test_loss:2.692, Lr:8.46E-05

Epoch:11, Train_acc:15.9%, Train_loss:2.677, Test_acc:14.4%, Test_loss:2.671, Lr:8.46E-05

Epoch:12, Train_acc:16.1%, Train_loss:2.664, Test_acc:14.7%, Test_loss:2.663, Lr:7.79E-05

Epoch:13, Train_acc:16.5%, Train_loss:2.652, Test_acc:15.0%, Test_loss:2.646, Lr:7.79E-05

Epoch:14, Train_acc:16.7%, Train_loss:2.631, Test_acc:15.0%, Test_loss:2.625, Lr:7.79E-05

Epoch:15, Train_acc:16.7%, Train_loss:2.616, Test_acc:15.0%, Test_loss:2.646, Lr:7.79E-05

Epoch:16, Train_acc:17.4%, Train_loss:2.611, Test_acc:15.3%, Test_loss:2.618, Lr:7.16E-05

Epoch:17, Train_acc:17.7%, Train_loss:2.598, Test_acc:15.3%, Test_loss:2.604, Lr:7.16E-05

Epoch:18, Train_acc:17.5%, Train_loss:2.588, Test_acc:15.6%, Test_loss:2.603, Lr:7.16E-05

Epoch:19, Train_acc:18.6%, Train_loss:2.571, Test_acc:15.8%, Test_loss:2.585, Lr:7.16E-05

Epoch:20, Train_acc:17.3%, Train_loss:2.570, Test_acc:15.8%, Test_loss:2.581, Lr:6.59E-05

Epoch:21, Train_acc:17.2%, Train_loss:2.572, Test_acc:15.8%, Test_loss:2.573, Lr:6.59E-05

Epoch:22, Train_acc:19.7%, Train_loss:2.521, Test_acc:16.7%, Test_loss:2.576, Lr:6.59E-05

Epoch:23, Train_acc:18.4%, Train_loss:2.540, Test_acc:16.7%, Test_loss:2.564, Lr:6.59E-05

Epoch:24, Train_acc:18.1%, Train_loss:2.528, Test_acc:16.9%, Test_loss:2.554, Lr:6.06E-05

Epoch:25, Train_acc:19.3%, Train_loss:2.516, Test_acc:16.9%, Test_loss:2.542, Lr:6.06E-05

Epoch:26, Train_acc:19.4%, Train_loss:2.513, Test_acc:17.2%, Test_loss:2.544, Lr:6.06E-05

Epoch:27, Train_acc:20.6%, Train_loss:2.507, Test_acc:17.5%, Test_loss:2.539, Lr:6.06E-05

Epoch:28, Train_acc:19.0%, Train_loss:2.504, Test_acc:17.5%, Test_loss:2.528, Lr:5.58E-05

Epoch:29, Train_acc:19.7%, Train_loss:2.503, Test_acc:17.8%, Test_loss:2.512, Lr:5.58E-05

Epoch:30, Train_acc:19.3%, Train_loss:2.479, Test_acc:18.3%, Test_loss:2.516, Lr:5.58E-05

Epoch:31, Train_acc:19.4%, Train_loss:2.482, Test_acc:18.3%, Test_loss:2.519, Lr:5.58E-05

Epoch:32, Train_acc:20.3%, Train_loss:2.481, Test_acc:18.1%, Test_loss:2.518, Lr:5.13E-05

Epoch:33, Train_acc:19.2%, Train_loss:2.486, Test_acc:18.1%, Test_loss:2.500, Lr:5.13E-05

Epoch:34, Train_acc:21.1%, Train_loss:2.467, Test_acc:18.1%, Test_loss:2.503, Lr:5.13E-05

Epoch:35, Train_acc:20.8%, Train_loss:2.461, Test_acc:18.3%, Test_loss:2.488, Lr:5.13E-05

Epoch:36, Train_acc:20.1%, Train_loss:2.459, Test_acc:18.3%, Test_loss:2.478, Lr:4.72E-05

Epoch:37, Train_acc:21.4%, Train_loss:2.438, Test_acc:18.6%, Test_loss:2.487, Lr:4.72E-05

Epoch:38, Train_acc:19.7%, Train_loss:2.451, Test_acc:18.3%, Test_loss:2.483, Lr:4.72E-05

Epoch:39, Train_acc:20.8%, Train_loss:2.434, Test_acc:18.3%, Test_loss:2.460, Lr:4.72E-05

Epoch:40, Train_acc:19.1%, Train_loss:2.434, Test_acc:18.1%, Test_loss:2.468, Lr:4.34E-05

Done

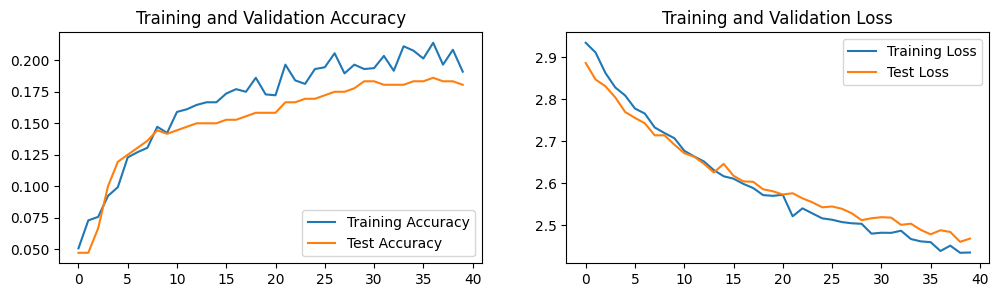

IV. Visualizing the results

4.1. Visualization

import matplotlib.pyplot as plt
# hide warnings
import warnings
warnings.filterwarnings("ignore")  # ignore warning messages
# plt.rcParams['font.sans-serif'] = ['SimHei']  # needed to display Chinese labels
plt.rcParams['axes.unicode_minus'] = False  # display minus signs correctly
plt.rcParams['figure.dpi'] = 100  # resolution

epochs_range = range(epochs)

plt.figure(figsize=(12, 3))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, train_acc, label='Training Accuracy')

plt.plot(epochs_range, test_acc, label='Test Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)

plt.plot(epochs_range, train_loss, label='Training Loss')

plt.plot(epochs_range, test_loss, label='Test Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()

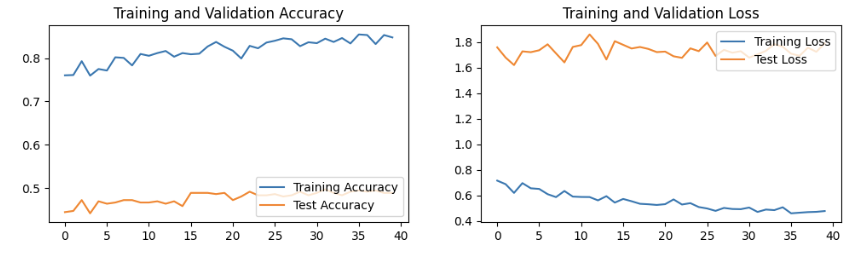

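📌 Adjusting the code to push test accuracy toward 60%. With the settings below (Adam and a higher initial learning rate), test accuracy plateaus at around 49%, as the log shows.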

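learn_rate = 9e-4  # initial learning rate
lambda1 = lambda epoch: 0.9 ** (epoch // 2)  # decay by a factor of 0.9 every 2 epochs
optimizer = torch.optim.Adam(model.parameters(), lr=learn_rate)
scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda1)  # choose the adjustment method
# then rerun the training loop from 3.4 with this optimizer and scheduler
print('Done')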

Epoch: 1, Train_acc:76.0%, Train_loss:0.716, Test_acc:44.4%, Test_loss:1.758, Lr:9.00E-04

Epoch: 2, Train_acc:76.1%, Train_loss:0.688, Test_acc:44.7%, Test_loss:1.678, Lr:8.10E-04

Epoch: 3, Train_acc:79.3%, Train_loss:0.620, Test_acc:47.2%, Test_loss:1.619, Lr:8.10E-04

Epoch: 4, Train_acc:76.0%, Train_loss:0.696, Test_acc:44.2%, Test_loss:1.726, Lr:7.29E-04

Epoch: 5, Train_acc:77.5%, Train_loss:0.656, Test_acc:46.9%, Test_loss:1.720, Lr:7.29E-04

Epoch: 6, Train_acc:77.2%, Train_loss:0.651, Test_acc:46.4%, Test_loss:1.735, Lr:6.56E-04

Epoch: 7, Train_acc:80.2%, Train_loss:0.610, Test_acc:46.7%, Test_loss:1.782, Lr:6.56E-04

Epoch: 8, Train_acc:80.1%, Train_loss:0.587, Test_acc:47.2%, Test_loss:1.711, Lr:5.90E-04

Epoch: 9, Train_acc:78.3%, Train_loss:0.635, Test_acc:47.2%, Test_loss:1.640, Lr:5.90E-04

Epoch:10, Train_acc:81.0%, Train_loss:0.591, Test_acc:46.7%, Test_loss:1.761, Lr:5.31E-04

Epoch:11, Train_acc:80.6%, Train_loss:0.588, Test_acc:46.7%, Test_loss:1.775, Lr:5.31E-04

Epoch:12, Train_acc:81.2%, Train_loss:0.587, Test_acc:46.9%, Test_loss:1.859, Lr:4.78E-04

...

Epoch:37, Train_acc:85.3%, Train_loss:0.465, Test_acc:49.2%, Test_loss:1.692, Lr:1.35E-04

Epoch:38, Train_acc:83.3%, Train_loss:0.470, Test_acc:49.4%, Test_loss:1.755, Lr:1.22E-04

Epoch:39, Train_acc:85.3%, Train_loss:0.472, Test_acc:48.9%, Test_loss:1.723, Lr:1.22E-04

Epoch:40, Train_acc:84.8%, Train_loss:0.478, Test_acc:48.9%, Test_loss:1.789, Lr:1.09E-04

Done

4.2. Predicting a specified image

from PIL import Image

classes = list(total_data.class_to_idx)

def predict_one_image(image_path, model, transform, classes):
    test_img = Image.open(image_path).convert('RGB')
    plt.imshow(test_img)  # show the image being predicted

    test_img = transform(test_img)
    img = test_img.to(device).unsqueeze(0)  # add a batch dimension: (1, C, H, W)

    model.eval()
    with torch.no_grad():  # inference only, no gradients needed
        output = model(img)

    _, pred = torch.max(output, 1)  # index of the highest score
    pred_class = classes[pred.item()]
    print(f'Prediction: {pred_class}')

# predict one photo from the dataset folder
predict_one_image(image_path='./data/p6/Angelina Jolie/001_fe3347c0.jpg',
                  model=model,
                  transform=train_transforms,
                  classes=classes)

Prediction: Angelina Jolie

4.3. Model evaluation

best_model.eval()

epoch_test_acc, epoch_test_loss = test(test_dl, best_model, loss_fn)

print(epoch_test_acc, epoch_test_loss)

0.49722222222222223 1.7509519656499226